Reverse engineering Asustor's NAS firmware (part 2)

By Ricardo

Note: this was previously called “Running my NAS OS on a VM (part 2)” but I believe the new title is better. Also, if you haven’t read part 1, you should. Trust me.

Hey, a fun fact: I started working on this part a long time ago, hence the very long delay before it got published!

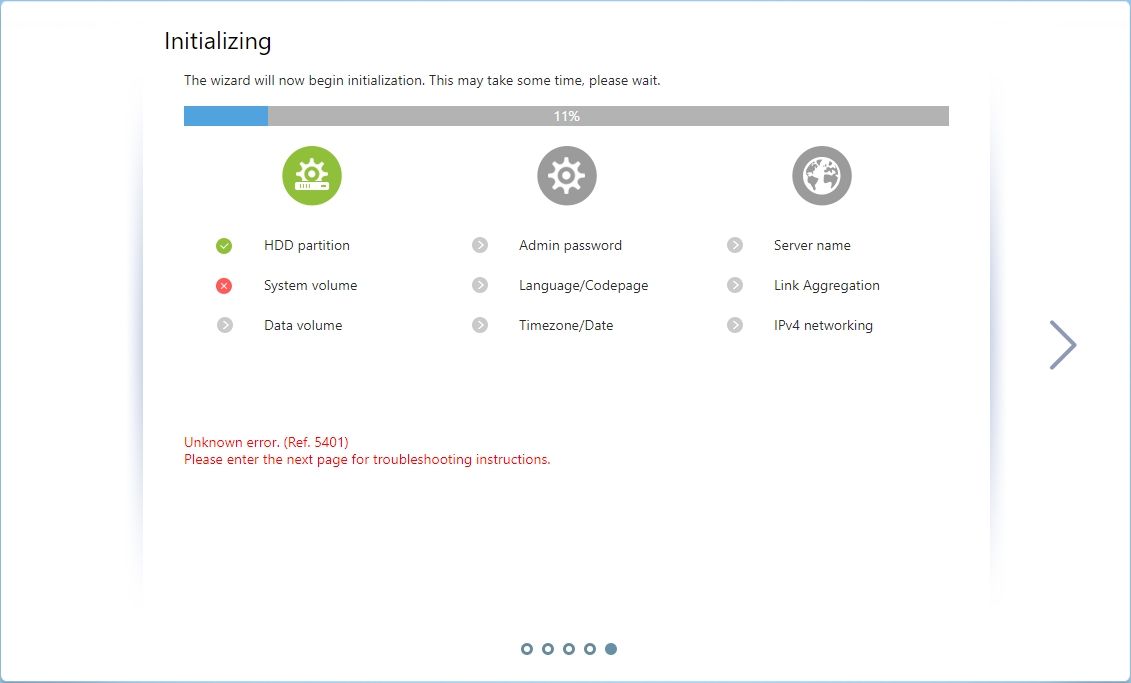

Last time I worked on this, I managed to get the main firmware to run on a VM by bypassing some code and patching whatever was necessary to make it boot. This is where we stopped:

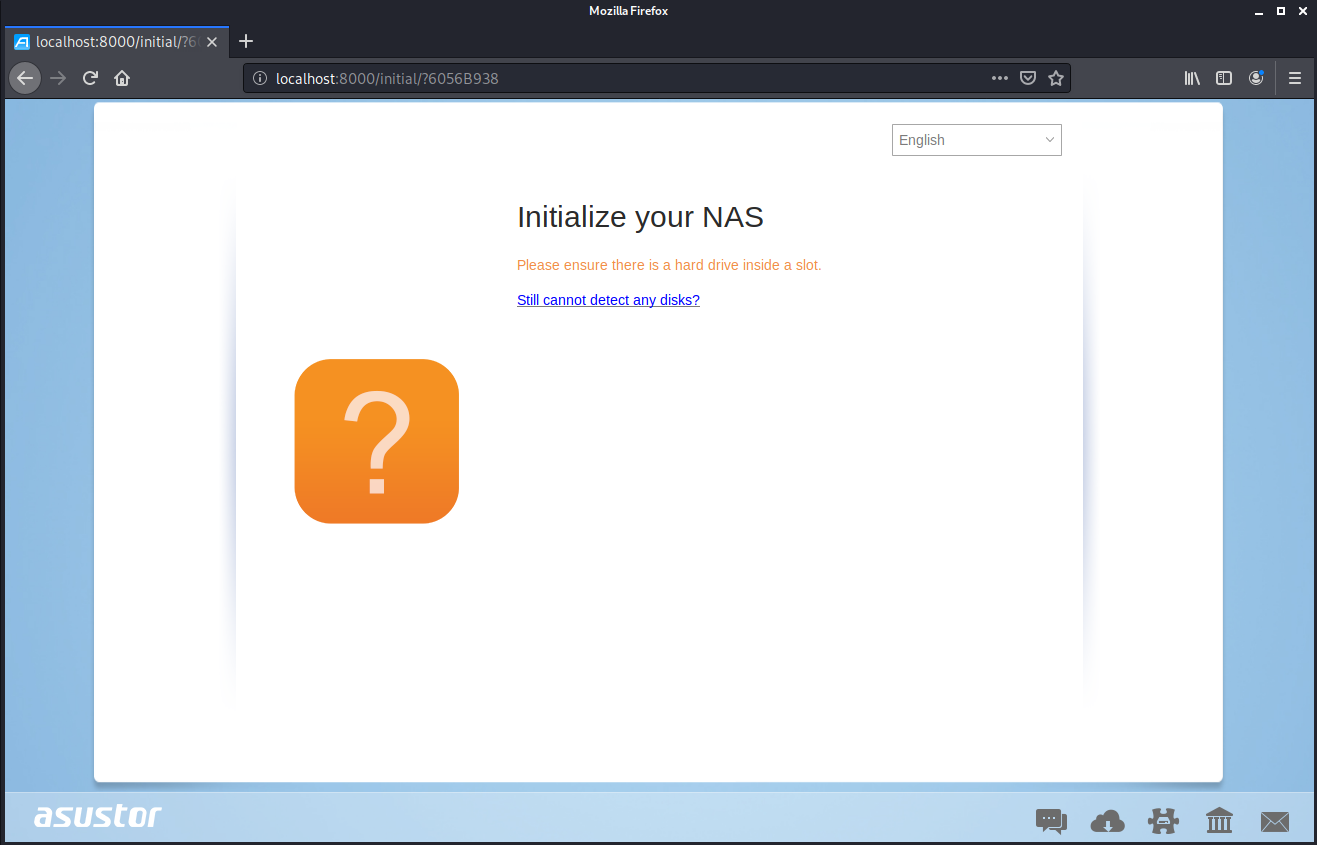

Since nothing is easy, it wouldn’t detect any disks. I honestly kinda expected this. Hey, I’m running the firmware on very unsupported hardware, and most of what the web interface does goes through those CGI binaries, which need a bunch of information from the hardware itself to work. The CGI call actually helps with debugging this: we call list-disk and get an error as output. We can even see the disks getting detected by the kernel as well.

Recreating the problem

We know we can call the CGI binaries by hand if we pass the correct arguments, and we also know they’ll check the sid for a valid session ID. We patched this before and used LD_PRELOAD to avoid needing it, but for the initial CGI it does not work:

# LD_PRELOAD=./hijack.so QUERY_STRING="act=list-disk" /usr/webman/initial/initial.cgi

Content-type: text/plain; charset=utf-8

{ "success": false, "error_code": 5303, "error_msg": "the sid is not specified or invalid!" }

A patched version of libcgi.so, like we did here, did the trick though:

# cat fakecgi.sh

export QUERY_STRING='sid=-fake-cgi-token-&act=list-disk&_dc=1604790200480'

export LD_LIBRARY_PATH=$(pwd)

echo "-fake-cgi-token- 0FFFFFFF 00000000 -------- 000003E7 172.16.1.100 admin" > /tmp/fake.login

/usr/webman/initial/initial.cgi

# ./fakecgi.sh

Content-type: text/plain; charset=utf-8

{ "success": false, "error_code": 5008, "error_msg": "the nas is already initialized!" }

Ok, so, in the NAS, it works and detects it has already been initialized. I tried running the same script outside the NAS, but unfortunately it kept saying it was the wrong SID, which is very weird and unexpected. Nevertheless, we can also analyze the code to figure out what is going on.

According to the code, it revives the user session (which we have hacked once) and checks if the user is an administrative one. It does this by calling the Is_Local_User_In_Administrators_Group function, which looks at /etc/group and checks if the user is in the administrators group. That simple.

local_4c = O_CGI_AGENT::Revive_Session();

if ((local_4c != 0) || (iVar4 = Is_Local_User_In_Administrators_Group(local_5fc), iVar4 != 1)) {

O_CGI_AGENT::Print_Content_Type_Header();

printf("{ \"success\": false, \"error_code\": %d, \"error_msg\": \"%s\" }\n",0x14b7, "the sid is not specified or invalid!");

goto LAB_004038a4;

}

This explains why it won’t run outside the NAS: I don’t have the administrators group in my environment. We can bypass this by patching the CGI, the libraries it uses, or simply by using LD_PRELOAD to load a custom library that provides those functions:

int _ZN11O_CGI_AGENT14Revive_SessionEi(void *this) {

return 0;

}

int _Z37Is_Local_User_In_Administrators_GroupPKc(char* param1) {

return 1;

}

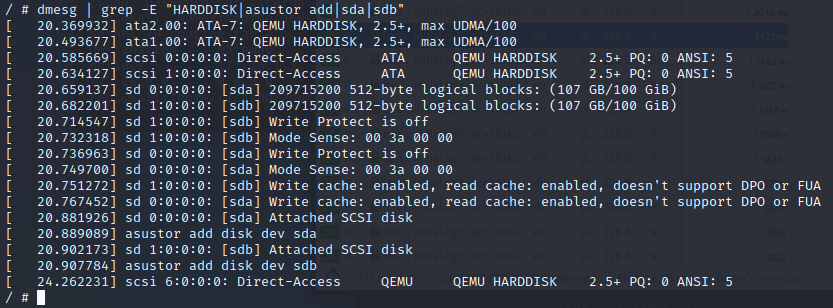

Once patched, we get to the same point as the VM: no NAS properties.

$ ./test.sh

Content-type: text/plain; charset=utf-8

{ "success": false, "error_code": 5011, "error_msg": "Can't get the nas property! (-2)" }

Now work on figuring this out can finally begin.

The NAS Properties

We got to the same issue as the VM itself, which means our efforts are now focused on figuring out those nasty NAS properties that fail to be read. We need to figure out where they come from, how we can add or patch them in (or out) and how to apply such a patch to the initramfs.

Looking at the initial CGI code (which is a very long function, btw), we get the “can’t get the nas property” error message whenever Lookup_Nas_Info fails. This function is exposed by libnasman, which is a huge library used by the NAS for many, many things.

local_4c = Lookup_Nas_Info(&local_538);

if ((int)local_4c < 0) {

printf("{ \"success\": false, \"error_code\": %d, \"error_msg\": \"%s (%d)\" }\n",0x1393, "Can\'t get the nas property!",(ulong)local_4c);

}

The argument seemed like a buffer, and passing a simple char[256] to it revealed it is indeed a buffer. Here are the contents when I call it on the NAS itself:

# ./test5

retval = 0

01 00 03 03 04 00 03 26 &

1d 1c 03 02 1f 00 11 00 &

30 01 00 00 26 00 00 00 0 &

00 00 00 00 00 00 00 00

00 01 02 03 00 00 00 00

00 00 00 00 00 00 00 00

00 00 00 00 00 00 00 00

00 10 18 00 00 00 00 01

02 00 00 00 00 00 50 01 P

10 01 20 01 00 00 00 00

00 00 00 00 00 00 10 02

20 02 30 02 00 00 00 00 0

00 00 00 00 00 00 30 30 0 0

2d 31 30 2d 31 38 2d 30 0 - 1 0 - 1 8 - 0

30 2d 30 30 2d 30 30 00 0 0 - 0 0 - 0 0

41 53 33 31 30 34 54 00 A S 3 1 0 4 T

00 00 00 00 00 00 00 00

XX XX XX XX XX XX XX XX (...)

XX XX XX XX XX XX XX XX (...)

00 00 00 00 33 2e 35 2e 1 3 . 5 .

33 2e 52 42 48 31 00 00 . 3 . R B H 1

00 00 00 00 00 00 00 00

00 00 00 00 00 00 00 00

00 00 00 00 00 00 00 00

00 00 00 00 00 00 00 00

00 00 00 00 00 00 00 00

00 00 00 00 00 00 00 00

00 00 00 00 00 00 00 00

00 00 00 00 00 00 00 00

00 00 00 00 00 00 00 00

00 00 00 00 00 00 00 00

00 00 00 00 00 00 00 00

The buffer was zeroed before calling the function, so any non-zero values were written by it. The buffer now contains some basic NAS information, such as model, serial number and firmware version, as well as some binary data at the beginning. Fun fact, the 00-10-18-00-00-00 over there is the MAC address, which is a very unusual one. I confirmed it by checking the eth0 interface on the OS and it is indeed correct. So weird.
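The test program that produced the dump isn't shown, so here is a minimal sketch of the row formatter such a tool could use. The helper name `format_hex_row` and its output layout are assumptions for illustration, not the original code; the real tool would zero a `char[256]`, pass it to Lookup_Nas_Info, and print one row per 8 bytes.

```c
#include <ctype.h>
#include <stddef.h>
#include <stdio.h>
#include <string.h>

/* Hypothetical helper: format up to 8 bytes as "hh hh ...  ascii".
 * Non-printable bytes are shown as '.'.
 * Returns 0 on success, -1 if the output buffer is too small. */
int format_hex_row(const unsigned char *data, size_t n, char *out, size_t outlen) {
    size_t pos = 0;
    if (n > 8) n = 8;
    /* 3 chars per hex byte, 1 separator space, n ASCII chars, 1 NUL */
    if (outlen < n * 3 + 1 + n + 1) return -1;
    for (size_t i = 0; i < n; i++)
        pos += (size_t)snprintf(out + pos, outlen - pos, "%02x ", data[i]);
    out[pos++] = ' ';
    for (size_t i = 0; i < n; i++)
        out[pos++] = isprint(data[i]) ? (char)data[i] : '.';
    out[pos] = '\0';
    return 0;
}
```

Feeding it the row that holds the model name makes the string easy to spot next to the hex bytes.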

Anyway, after checking how the code works, it seems that the NAS information is stored in 3 shared memory objects - .nas.summary, .nas.diskstat and .nas.partstat - which are created by some other functions. For Lookup_Nas_Info, only the first one seems to be used. The function is messy, but it seems to just copy part of the summary shared memory block into the specified buffer. I don’t really know what exactly is stored in that buffer, but from what the function seems to return, we’re dealing with at least some basic NAS information.

Looking at all the exported functions, there’s a very interesting one called Create_Nas_Summary. It manipulates all those objects, as it calls the Create_Share_Memory function, which is just a wrapper for shm_open and mmap calls. It also sets a global variable to 1, most likely indicating that the summary is available. Based on the code, the information is cached, which is a very nice touch from Asustor: if the data has already been loaded, it just returns the same one. Nice. The code also shows that each memory block has a specific size. This is very useful, as we now know how much memory we can read from each of them to dump the whole object.

if (IS_SUMMARY_AVAILABLE == -1) {

IS_SUMMARY_AVAILABLE = 1;

_DAT_0039acf0 = 0x3a18;

// .nas.summary has 14872 bytes

retval = Create_Share_Memory(".nas.summary",0x3a18,&shared_mem_ptr);

if (-1 < retval) {

DAT_0039acec = 0xee0;

NAS_SUMMARY_PTR = shared_mem_ptr;

// .nas.diskstat has 3808 bytes

retval = Create_Share_Memory(".nas.diskstat",0xee0,&shared_mem_ptr);

if (-1 < retval) {

DAT_0039ace8 = 0xff0;

NAS_DISKSTAT_PTR = shared_mem_ptr;

// .nas.partstat has 4080 bytes

retval = Create_Share_Memory(".nas.partstat",0xff0,&shared_mem_ptr);

if (-1 < retval) {

NAS_PARTSTAT_PTR = shared_mem_ptr;
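For readers who haven't mapped files into memory before, this is roughly what a wrapper like Create_Share_Memory boils down to. It is a sketch under assumptions: the name `map_block` is made up, and it uses plain `open` on a file path instead of `shm_open` so it works on any path (on Linux, POSIX shared memory objects show up as files under /dev/shm anyway).

```c
#define _POSIX_C_SOURCE 200809L
#include <fcntl.h>
#include <stddef.h>
#include <sys/mman.h>
#include <sys/types.h>
#include <unistd.h>

/* Hypothetical stand-in for a shared-memory wrapper: open (or create) a
 * file, size it to `size` bytes and map it read/write.
 * Returns 0 on success and stores the mapping in *out, -1 on failure. */
int map_block(const char *path, size_t size, void **out) {
    int fd = open(path, O_RDWR | O_CREAT, 0600);
    if (fd < 0) return -1;
    if (ftruncate(fd, (off_t)size) < 0) {
        close(fd);
        return -1;
    }
    void *p = mmap(NULL, size, PROT_READ | PROT_WRITE, MAP_SHARED, fd, 0);
    close(fd); /* the mapping stays valid after the descriptor is closed */
    if (p == MAP_FAILED) return -1;
    *out = p;
    return 0;
}
```

Because the mapping is MAP_SHARED, anything one process writes is visible to every other process that maps the same object, which is how a daemon can publish the summary for the CGI binaries to read.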

Based on all this information, I dumped all the shared memory by simply looking at /dev/shm/:

# dd if=/dev/shm/.nas.summary of=nas.summary.bin bs=1 count=14872

14152+0 records in

14152+0 records out

14152 bytes (13.8KB) copied, 0.138858 seconds, 99.5KB/s

# dd if=/dev/shm/.nas.diskstat of=nas.diskstat.bin bs=1 count=3808

3808+0 records in

3808+0 records out

3808 bytes (3.7KB) copied, 0.025841 seconds, 143.9KB/s

# dd if=/dev/shm/.nas.partstat of=nas.partstat.bin bs=1 count=4080

4080+0 records in

4080+0 records out

4080 bytes (4.0KB) copied, 0.033768 seconds, 118.0KB/s

I actually learned a lot about shared memory and how to map file descriptors into memory. This has been incredibly interesting so far!

A quick look at the summary indicated it contains the data we already expected (model, serial number, etc), some other random information such as ethernet ports, boot disk and partition, as well as some unknown binary data. The diskstat contains disk information, but it seems to be mostly a table of sdX to drive model and serial number. Meanwhile the partstat block contains partition UUIDs - nothing fancy.

Anyway, back to Create_Nas_Summary. Since it creates the shared memory objects, I had to wonder: who calls it? A simple way was to grep the entire initramfs image:

$ grep -ri Create_Nas_Summary *

Binary file usr/sbin/nasmand matches

Binary file usr/lib/libnasman.so.0.0 matches

Aw, fuck. The same nasmand that I’ve replaced with a dummy service seems to be the only binary referencing the function we need to be called.

Sigh.

The daemon is back

We now know we need nasmand to run, otherwise the Create_Nas_Summary function won’t be called. Without it, the shared memory blocks won’t exist, making everything else fail. We could either replace it with a piece of code that calls it, or we can hijack the whole thing and trick it into working. Let’s try the second option, just for fun.

After around 4 hours of debugging assembly code, I managed to figure out the only thing that needs to be patched out: Is_System_Disk_Available. Ironic, isn’t it? If that function returns 0 instead of 1, the code will run. The daemon will both create the shared memory stuff and populate it with whatever is necessary.

Here are the 3 lines of code I needed to make it work. Yes, 3 lines.

# cat patch-system-disk.c

int _Z24Is_System_Disk_Availablev() {

return 0;

}

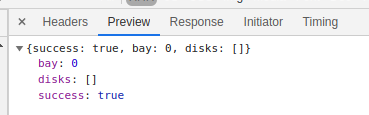

To run locally (outside the VM or the NAS), all I have to do is add the query string and a patch to bypass session validation to initial.cgi and, sure enough, it runs just fine:

# LD_PRELOAD=patch-system-disk.so ./nasmand

# QUERY_STRING="act=list-disk" LD_PRELOAD=patch-session.so ./initial.cgi

Content-type: text/plain; charset=utf-8

{ "success": true, "bay": 0, "disks":[ ]}

For patching the initramfs, simply copying the library file into it and patching the init script for nasmand works:

cp ../patch-system-disk.so ./usr/lib/patch-system-disk.so

sed -i "s|/usr/sbin/nasmand|LD_PRELOAD=/usr/lib/patch-system-disk.so /usr/sbin/nasmand|g" ./etc/init.d/S11nasmand

With those patches, the code will now run without any issues and the firmware will not fail loading the NAS information, but it still won’t find any disk.

Progress!

Another daemon returns!

Ok, this is where another funny story comes in. Remember the stormand we removed earlier? Do you wanna know exactly what it does right after probing the boot disk (or rebooting in case of failure)? Well, it finds the drives!

So, remember those two daemons I removed earlier? Yeah, turns out I need both of them to make this work.

Remember kids, don’t delete shit you don’t think you need because some day you’ll fucking need it.

The first step in making it work properly is to patch the boot disk probing. Without doing so, it’ll just fail and reboot the system, as we saw before. We learned that doing this is a bit tricky, but I’ve found that the easiest way is to keep it as dumb as possible and simply hijack Probe_Booting_Device to make it return the correct values. We can inject such values through the command line, just like this:

# cat /proc/cmdline

console=ttyS0,115200n8 __patch-system-disk=/dev/sdc2 __patch-system-disk-type=ext4

Then, using some simple (and very poorly written, by me) code that parses the boot command line, we can get those values and return them:

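#include <stdio.h>

#include <stdlib.h>

#include <string.h>

// Reads "param=value" from /proc/cmdline and copies the value into output.

int _read_cmdline_param(char *param, char *output) {

char buffer[1024] = {0};

snprintf(buffer, sizeof(buffer), "cat /proc/cmdline | xargs -n1 | grep '%s=' | cut -d'=' -f2 > /tmp/cmdlineparam", param);

system(buffer);

buffer[0] = 0;

FILE *fp = fopen("/tmp/cmdlineparam", "r");

if (fp == NULL) {

printf("WARN: failed to open /tmp/cmdlineparam\n");

return -1;

}

fgets(buffer, sizeof(buffer), fp);

fclose(fp);

for (size_t i = 0; i < sizeof(buffer); i++) {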

if (buffer[i] == '\n' || buffer[i] == ' ') {

buffer[i] = 0;

break;

}

}

if (buffer[0] != 0) {

strcpy(output, buffer);

return 0;

} else {

printf("WARN: failed to read param %s from cmdline\n", param);

return -1;

}

}

int _Z20Probe_Booting_DeviceiPciS_(int param_1,char *param_2,int param_3,char *param_4) {

int retval = 0;

retval = _read_cmdline_param("__patch-system-disk", param_2);

if (retval >= 0) {

retval = _read_cmdline_param("__patch-system-disk-type", param_4);

}

return retval;

}
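The shell pipeline above works, but the same lookup can be done in plain C without spawning `cat`, `xargs`, `grep` and `cut`. This is only a sketch of an alternative, not what the patch library actually ships; `cmdline_param` is a hypothetical name, and it takes the command line as a string so the caller would read /proc/cmdline first.

```c
#include <stddef.h>
#include <string.h>

/* Hypothetical alternative parser: find "key=value" in a space-separated
 * kernel command line and copy the value into out.
 * Returns 0 on success, -1 if the key is missing, empty, or too long. */
int cmdline_param(const char *cmdline, const char *key, char *out, size_t outlen) {
    size_t klen = strlen(key);
    const char *p = cmdline;
    while (*p != '\0') {
        while (*p == ' ' || *p == '\n') p++;   /* skip separators */
        size_t tlen = strcspn(p, " \n");       /* length of this token */
        /* the token must be exactly "key=" followed by something */
        if (tlen > klen && strncmp(p, key, klen) == 0 && p[klen] == '=') {
            size_t vlen = tlen - klen - 1;
            if (vlen == 0 || vlen >= outlen) return -1;
            memcpy(out, p + klen + 1, vlen);
            out[vlen] = '\0';
            return 0;
        }
        p += tlen;
    }
    return -1;
}
```

Checking for the `=` right after the key matters here, since `__patch-system-disk` is a prefix of `__patch-system-disk-type`.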

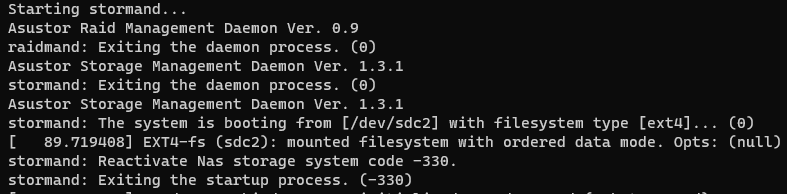

And, sure enough, that tricked stormand into finding the correct boot device.

One problem solved!

Activating the damn drives

The next step is figuring out why reactivating all drives fails with -330 as the return code. We can use the error message to go through the disassembled code to try and find its origin:

Scan_All_Nas_Disks();

retval = Reactivate_Nas_Storage_System(Examine_Builtin_Services);

if (retval < 0) {

printf("stormand: Reactivate Nas storage system code %d.\n");

}

else {

printf("stormand: Reactivate %d volumes.\n");

}

So, it scans all disks first and then reactivates the NAS storage system. If we call Scan_All_Nas_Disks on the NAS itself, it returns 5, which is correct (4 SATA disks and 1 USB (the system disk)). However, if we call it on the VM, it returns 0, indicating that no disks were found. Aha! So let’s study how it works then:

int Scan_All_Nas_Disks(void)

{

int probe_retval;

int disk_idx;

int disk_count;

_T_NAS_DISK_STAT_ disk_stat [152];

disk_count = 0;

disk_idx = 0;

do {

probe_retval = Probe_Nas_Disk(disk_idx,disk_stat);

if (-1 < probe_retval) {

if (disk_stat[0] == (_T_NAS_DISK_STAT_)0xff) {

Update_Nas_Disk_Info(disk_idx,disk_stat);

}

else {

disk_count = disk_count + 1;

FUN_0013ca40();

}

}

disk_idx = disk_idx + 1;

} while (disk_idx != 0x1c);

return disk_count;

}

It seems to be based on a simple loop counting to 0x1c (28 in decimal), which, after reverse engineering a function called Get_Disk_Device_Name, kinda makes sense: the NAS uses the first 26 for sda to sdz, then the rest for nvmeXn1 drives. There’s a model featured on Jeff Geerling’s YouTube channel that supports NVMe-based storage. Cool! Why it limits to 28 is something I don’t know, though.
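The index-to-name mapping described above could look something like the sketch below. This is a guess reconstructed from the description, not the decompiled Get_Disk_Device_Name: the function name, the return values and whether the real one includes a /dev/ prefix are all assumptions.

```c
#include <stddef.h>
#include <stdio.h>
#include <string.h>

/* Hypothetical reconstruction: indexes 0..25 map to sda..sdz and
 * indexes 26..27 map to nvme0n1 and nvme1n1.
 * Returns 0 on success, -1 for an invalid index or a short buffer. */
int disk_device_name(int idx, size_t len, char *out) {
    int n;
    if (idx < 0 || idx >= 28) return -1;
    if (idx < 26)
        n = snprintf(out, len, "sd%c", 'a' + idx);
    else
        n = snprintf(out, len, "nvme%dn1", idx - 26);
    return (n < 0 || (size_t)n >= len) ? -1 : 0;
}
```

With this layout, 28 slots means 26 SCSI-style names plus two NVMe drives.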

Anyway, inside the loop, it first tries to probe the disk by calling Probe_Nas_Disk, passing the disk index and the disk stat buffer. Let’s take a look at that function then.

ulong Probe_Nas_Disk(int disk_idx,_T_NAS_DISK_STAT_ *disk_stat)

{

uint uVar1;

ulong uVar2;

undefined8 disk_dev_name;

undefined8 local_10;

disk_dev_name = 0;

local_10 = 0;

uVar1 = Get_Disk_Device_Name(disk_idx,0x10,(char *)&disk_dev_name);

if (-1 < (int)uVar1) {

uVar2 = Probe_Nas_Disk((char *)&disk_dev_name,disk_stat);

return uVar2;

}

Reset_Nas_Disk_Info_Object(disk_stat);

return (ulong)uVar1;

}

The probing process is kinda simple, actually: it tries to get the device name (which I’ve explained before how it works) and then proceeds to call another variant of the probe function. Then it just returns whatever that function returns. I think we’re finally getting somewhere, people!

This new variant is bigger and does a bit more stuff, but nothing really complicated, actually.

ulong Probe_Nas_Disk(char *disk_dev_name,_T_NAS_DISK_STAT_ *disk_stat)

{

uint uVar1;

ulong uVar2;

long lVar3;

byte *pbVar4;

byte *pbVar5;

bool bVar6;

bool bVar7;

byte bVar8;

_T_NAS_DISK_BUS_ disk_bus [24];

bVar8 = 0;

Reset_Nas_Disk_Info_Object(disk_stat);

bVar6 = false;

bVar7 = disk_dev_name == (char *)0x0;

if (bVar7) {

uVar2 = 0xffffffea;

}

else {

lVar3 = 5;

pbVar4 = (byte *)disk_dev_name;

pbVar5 = (byte *)"/dev/";

do {

if (lVar3 == 0) break;

lVar3 = lVar3 + -1;

bVar6 = *pbVar4 < *pbVar5;

bVar7 = *pbVar4 == *pbVar5;

pbVar4 = pbVar4 + (ulong)bVar8 * -2 + 1;

pbVar5 = pbVar5 + (ulong)bVar8 * -2 + 1;

} while (bVar7);

if ((!bVar6 && !bVar7) == bVar6) {

disk_dev_name = disk_dev_name + 5;

}

uVar1 = Probe_Nas_Disk_Bus(disk_dev_name,disk_bus);

if ((int)uVar1 < 0) {

uVar2 = (ulong)uVar1;

if (uVar1 == 0xffffffed) {

uVar2 = 0x141;

}

return uVar2;

}

uVar2 = Probe_Nas_Disk(disk_bus,disk_stat);

}

return uVar2;

}

It’s bigger, but it’s not complicated at all. The reconstructed code Ghidra generates is a bit messy, but essentially what it does is extract the pure device name, without the /dev path on it - so /dev/sda will become sda. Then it passes it to Probe_Nas_Disk_Bus, which will return where the disk is located. That will then be used on yet another variant of Probe_Nas_Disk, this one receiving the bus and the stat buffer.
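That byte-by-byte loop in the decompiled output is just a compiler-inlined prefix comparison. Written by hand it would probably look closer to this sketch (the helper name `strip_dev_prefix` is made up for illustration):

```c
#include <string.h>

/* Hypothetical equivalent of the inlined loop: skip a leading "/dev/"
 * if present, otherwise return the name unchanged. */
const char *strip_dev_prefix(const char *name) {
    if (name == NULL) return NULL;
    return strncmp(name, "/dev/", 5) == 0 ? name + 5 : name;
}
```

So both /dev/sda and sda end up as sda before being handed to Probe_Nas_Disk_Bus.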

Calling this variant on the NAS for /dev/sda will yield 0 as the return value, while the VM will give us a -42. There are only two functions that could return this value now: Probe_Nas_Disk_Bus or Probe_Nas_Disk. Calling those functions from a simple C program on both the NAS and the VM will show us the culprit: Probe_Nas_Disk_Bus.

Progress!

Catching a Bus

The idea behind Probe_Nas_Disk_Bus seems to be very simple: figure out which bus the disk is on. For that, it checks the /sys/block path, which has a symbolic link to the device in the /sys structure. Here’s what it looks like on the real deal:

root@realdeal:~ # ls -la /sys/block | grep sd

lrwxrwxrwx 1 root root 0 Apr 1 23:45 sda -> ../devices/pci0000:00/0000:00:13.0/ata1/host0/target0:0:0/0:0:0:0/block/sda/

lrwxrwxrwx 1 root root 0 Apr 1 23:45 sdb -> ../devices/pci0000:00/0000:00:13.0/ata2/host1/target1:0:0/1:0:0:0/block/sdb/

lrwxrwxrwx 1 root root 0 Apr 1 23:45 sdc -> ../devices/pci0000:00/0000:00:1c.1/0000:02:00.0/ata3/host2/target2:0:0/2:0:0:0/block/sdc/

lrwxrwxrwx 1 root root 0 Apr 1 23:45 sdd -> ../devices/pci0000:00/0000:00:1c.1/0000:02:00.0/ata4/host3/target3:0:0/3:0:0:0/block/sdd/

lrwxrwxrwx 1 root root 0 Apr 1 23:45 sde -> ../devices/pci0000:00/0000:00:14.0/usb1/1-3/1-3:1.0/host4/target4:0:0/4:0:0:0/block/sde/

On the VM the structure is very similar, as it emulates the required SATA connections we need.

root@AS0000T-14B7:~ # ls -la /sys/block | grep sd

lrwxrwxrwx 1 root root 0 Apr 2 22:43 sda -> ../devices/pci0000:00/0000:00:11.0/0000:02:05.0/ata1/host0/target0:0:0/0:0:0:0/block/sda/

lrwxrwxrwx 1 root root 0 Apr 2 22:43 sdb -> ../devices/pci0000:00/0000:00:11.0/0000:02:05.0/ata2/host1/target1:0:0/1:0:0:0/block/sdb/

lrwxrwxrwx 1 root root 0 Apr 2 22:43 sdc -> ../devices/pci0000:00/0000:00:11.0/0000:02:05.0/ata3/host2/target2:0:0/2:0:0:0/block/sdc/

The function will then call a secondary function called Is_Nas_Disk_Bus. This function checks which bus the drive is attached to and returns a result value for that. It receives as arguments the device path (the real one, not the link) and a disk bus structure pointer. If it doesn’t match any of them, it returns -0x2a, which is -42 in decimal. Aha!

int Is_Nas_Disk_Bus(char *dev_path,_T_NAS_DISK_BUS_ *disk_bus)

{

int retval;

int iVar1;

if (dev_path == (char *)0x0) {

retval = -0x16;

}

else {

retval = Is_Nas_Ata_Disk_Bus(dev_path,disk_bus);

if (retval == 0) {

retval = Is_Nas_Usb_Disk_Bus(dev_path,disk_bus);

if (retval == 0) {

retval = Is_Nas_Sas_Disk_Bus(dev_path,disk_bus);

if ((retval == 0) && (retval = Is_Nas_Nvme_Disk_Bus(dev_path,disk_bus), retval == 0)) {

retval = -0x2a;

}

}

else {

if (((disk_bus[2] == (_T_NAS_DISK_BUS_)0x2) && (((byte)disk_bus[8] & 0xc) == 0)) &&

(iVar1 = Is_Nas_External_Usb_Disk_Disabled(), iVar1 == 1)) {

*(ushort *)(disk_bus + 6) = *(ushort *)(disk_bus + 6) | 0x100;

}

}

}

}

return retval;

}

If we manually call Is_Nas_Ata_Disk_Bus on the path used by sda on the VM (/sys/devices/pci0000:00/0000:00:11.0/0000:02:05.0/ata1/host0/target0:0:0/0:0:0:0/block/sda), it returns 0, which is a bad result here, as it’ll try to find the disk on other buses. So the issue is in that function itself. Btw, that path will be used a lot from now on, so wherever I refer to a “device path”, “our case” or something similar, I mean that string.

Is_Nas_Ata_Disk_Bus is a messy bastard as well, but I think I managed to figure it out. Let’s go through each part of it, checking what is going on.

The first thing it does is to verify the path you passed to it:

if (dev_path == (char *)0x0) {

return -0x16;

}

pcVar3 = strstr(dev_path,"/ata");

if (pcVar3 == (char *)0x0) {

return 0;

}

pcVar3 = strstr(pcVar3,"/target");

if (pcVar3 == (char *)0x0) {

return 0;

}

pcVar4 = strstr(pcVar3,"/block/sd");

if (pcVar4 == (char *)0x0) {

return 0;

}

Or translating to english:

- If the device path is null, returns -0x16.
- If the device path does not contain the string /ata, returns 0.
- If the device path does not contain the string /target, returns 0.
- If the device path does not contain the string /block/sd, returns 0.

Essentially the function is just verifying you passed a valid ATA device path to it. On our test path you can see we have all the required strings:

```
/sys/(…)/0000:02:05.0/ata1/host0/target0:0:0/0:0:0:0/block/sda
                     ^^^^       ^^^^^^^             ^^^^^^^^^
```

At this point, `pcVar3` is a pointer to the `/target(...)` part of the string, and `pcVar4` to the `/block/sda` part. **Keep that in mind.**
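To make the pointer juggling easier to follow, here is a small sketch that repeats the three `strstr` checks and hands back the two pointers. The name `split_ata_path` and the success value of 1 are assumptions; the real function keeps going after this point instead of returning.

```c
#include <string.h>

/* Hypothetical helper mirroring the path validation: returns -0x16 for a
 * NULL path, 0 if the path does not look like an ATA disk path, and 1
 * (assumed success value) with *target -> "/target..." and
 * *block -> "/block/sd..." otherwise. */
int split_ata_path(const char *dev_path, const char **target, const char **block) {
    if (dev_path == NULL) return -0x16;
    const char *p = strstr(dev_path, "/ata");
    if (p == NULL) return 0;
    p = strstr(p, "/target");
    if (p == NULL) return 0;
    const char *b = strstr(p, "/block/sd");
    if (b == NULL) return 0;
    *target = p;
    *block = b;
    return 1;
}
```

Both "/target" and "/block/" are 7 characters long, so adding 7 to either pointer lands on the interesting part: the `0:0:0` triple for the first, and the device name (`sda`) for the second.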

It then grabs some information it needs, such as the device ID and NAS information. Finally, it parses a string using the information it got from the device ID:

```c

retval = Get_Disk_Device_Id(pcVar4 + 7,&out_dev_id_1,&out_dev_id_2);

if (retval < 0) {

return 0;

}

retval = Lookup_Nas_Info(&props);

if (retval < 0) {

return retval;

}

retval = sscanf(pcVar3 + 7,"%d:%d:%d",&target_1,&target_2,target_3);

if (retval != 3) {

return 0;

}
```

Or again in english:

- Get the disk device ID for the block device. If that fails, return 0.
- Get the NAS information. If that fails, return the return value of that call.
- Parse the target in the path, extracting each bus index from it.

In our case, pcVar4 + 7 is sda, and calling Get_Disk_Device_Id on both the NAS and VM yields the correct results regarding the device ID itself. The NAS information lookup won’t fail as we fixed that before - plus our return value is 0, not a negative number. Finally, the sscanf on pcVar3 + 7 (which is 0:0:0 in our case) will extract all digits from our bus path: all zeros. There’s no reason for that to fail, so we’ll just assume it worked.

The next step is to read the device class from a file called class on the /sys structure. It builds the string from the first number on the target (target_1) incremented by 1.

snprintf((char *)(props.field_0xac + 4),0x30,"/sys/class/ata_device/dev%d.0/class", (ulong)(target_1 + 1));

retval = Simple_Read_File((char *)(props.field_0xac + 4),0,device_class,0x10);

if (retval < 3) {

return -0x13;

}

if ((retval < 0x11) && (device_class[retval + -1] == '\n')) {

device_class[retval + -1] = '\0';

}

Simple_Read_File will return the number of bytes read. If it returns less than 3, the function fails with -0x13 as the return code. Otherwise, it’ll check if it’s less than 17 bytes (0x11), and if the last character is \n (line break). If that happens, it’ll replace that same line break with a null terminator.
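Stripped of the decompiler noise, the target parsing and class lookup steps are small enough to sketch as three helpers. The helper names are hypothetical, and the file read itself is left out so the logic can be tried without a real /sys tree.

```c
#include <stddef.h>
#include <stdio.h>
#include <string.h>

/* Parse the "a:b:c" triple that follows "/target"; returns 1 when all
 * three numbers were read, 0 otherwise. */
int parse_target(const char *s, int *a, int *b, int *c) {
    return sscanf(s, "%d:%d:%d", a, b, c) == 3;
}

/* Build the class file path; note the +1 on the first target number. */
int ata_class_path(int target_1, char *out, size_t len) {
    int n = snprintf(out, len, "/sys/class/ata_device/dev%d.0/class", target_1 + 1);
    return (n < 0 || (size_t)n >= len) ? -1 : 0;
}

/* Mirror of the post-read check: n is the number of bytes read.
 * Returns -0x13 when fewer than 3 bytes were read, 0 otherwise, and
 * strips one trailing newline. */
int trim_class(char *buf, int n) {
    if (n < 3) return -0x13;
    if (n < 0x11 && buf[n - 1] == '\n') buf[n - 1] = '\0';
    return 0;
}
```

For the VM path, target 0:0:0 therefore resolves to /sys/class/ata_device/dev1.0/class.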

Based on real hardware and educated guesses, the 3 bytes it expects seem to be ata, which is exactly what both our systems return. This value, however, will only be used later on, when it is compared to pmp (port multiplier). Weird.

Anyway, the next step is interesting: it checks where the drive is attached. It first checks the SATA ports, then eSATA and finally M.2 (.. NVMe?). If all of those fail, it exits with return code 0. Cool.

retval = props.sata_count - 1;

if (retval == -1) {

retval = props.esata_count - 1;

if (retval != -1) {

// (attached over eSATA, removed for simplicity)

}

if (props.m2_count == 0) {

return 0;

}

}

But here’s the catch: our sata_count and esata_count are both 0. Really, zero! I have no idea why (yet), but they are. In that case, the code will eventually fail and return a zero, indicating the disk is not on SATA - which is obviously wrong.

So now we need to figure out why the fuck it is setting the SATA disk/port count to zero.

Going back, way back

Let’s take a step back here. Or maybe many, many steps.

Remember the NAS summary, properties and so on? I’ve found their offsets in the shared memory object, as well as managed to decode some of their meanings. That’s why in the code above we knew what each offset in the structure was: I’ve been decoding the damn thing since early this morning. Anyway, in the shared object we have both the NAS properties and NAS stats, each one in their respective offsets. The offsets were simply found on a function called Dump_Nas_Summary - yeah, not even joking:

void Dump_Nas_Summary(void)

{

// (...)

Dump_Nas_Prop((_T_NAS_PROP_ *)(NAS_SUMMARY_PTR + 0xc));

Dump_Nas_Stat((_T_NAS_STAT_ *)(NAS_SUMMARY_PTR + 0xb8));

// (...)

}

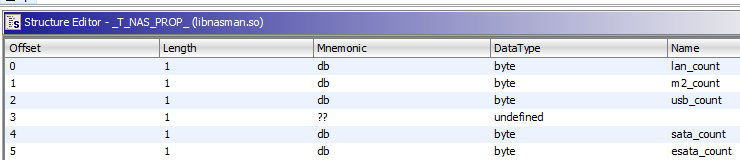

Those functions, Dump_Nas_Prop and Dump_Nas_Stat, helped a lot in decoding the _T_NAS_PROP_ and _T_NAS_STAT_ structures, to the point that I’m confident in saying that the firmware is not detecting any SATA disks or ports at all. This would explain why it isn’t able to initialize any disk or anything. If we look at the data structure for the NAS properties, we see this:

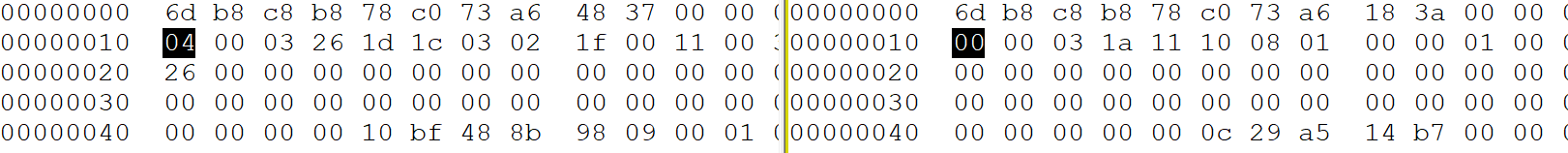

If we check the summary dumps side-by-side, we can clearly see they have different properties (which is expected), but also a different SATA disk/port count. The offset for that is 0x0C + 0x04, which is 0x10.

So, no drives. The kernel does find them, but unfortunately the firmware does not. Ok, so maybe we found our issue. Now we just need to make that 00 over there become a 04. That shouldn’t be too hard… right?
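The offset arithmetic is simple enough to check against a dump with a few lines of code. This sketch assumes the SATA count is a single byte at offset 0x04 of the properties block, which matches the `04` seen in the Lookup_Nas_Info buffer from the real NAS; the macro and function names are made up for illustration.

```c
#include <stddef.h>
#include <stdint.h>

#define NAS_PROP_OFFSET   0x0c  /* properties block inside .nas.summary */
#define SATA_COUNT_OFFSET 0x04  /* assumed one-byte SATA count in the block */

/* Read the SATA count from a raw summary dump.
 * Returns the count, or -1 if the dump is missing or too short. */
int summary_sata_count(const uint8_t *summary, size_t len) {
    size_t off = NAS_PROP_OFFSET + SATA_COUNT_OFFSET; /* 0x10 */
    if (summary == NULL || len <= off) return -1;
    return summary[off];
}
```

Running it over nas.summary.bin from the NAS and from the VM would show the 4 versus 0 difference without eyeballing hex dumps.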

The CPU Patch

Nothing is easy.

After looking at the code for many hours, I managed to figure a few things out. First of all, it won’t work out of the box. You see, once I started looking deep into the Probe_Nas_Attribute function (which is responsible for initializing and loading the NAS attributes (duh)) and Probe_Nas_Pch (which is responsible for… something?), I realized that some of it is hard-coded to the expected hardware. For example, the CPU.

You see, the NAS expects a few things to work, and one of them is a very specific CPU - or at least one of the supported ones. One way it checks for such a specific detail is through 2 functions: Scan_Pci_Device and Find_Pci_Device. The first will return a list of PCI devices installed in the system, while the second will iterate over it against another list - the one with hardcoded IDs. If you call Probe_Nas_Pch (which will do this PCI device check) on the NAS, you’ll get a value of 3 as a result, while in the VM it will give you a negative value, indicating an error. After playing with it for a while, I managed to figure out what it was finding, and it was defined by an initialization function. This is the function - take a look at index 3.

void _PopulateAcceptedDevices(void)

{

// Index 0

_T_PCI_DEVICE_ID__0039a3f8.class = 0x601;

_T_PCI_DEVICE_ID__0039a3f8.vendor_id = 0x8086;

_T_PCI_DEVICE_ID__0039a3f8.device_id = 0x3a16;

_T_PCI_DEVICE_ID__0039a3f8.subsystem_device = 0x3a16;

// Index 1

_T_PCI_DEVICE_ID__0039a420.class = 0x601;

_T_PCI_DEVICE_ID__0039a420.vendor_id = 0x8086;

_T_PCI_DEVICE_ID__0039a420.device_id = 0x8c56;

_T_PCI_DEVICE_ID__0039a420.subsystem_device = 0x7270;

// Index 2

_T_PCI_DEVICE_ID__0039a448.class = 0x601;

_T_PCI_DEVICE_ID__0039a448.vendor_id = 0x8086;

_T_PCI_DEVICE_ID__0039a448.device_id = 0xf1c;

_T_PCI_DEVICE_ID__0039a448.subsystem_device = 0x7270;

// Index 3

_T_PCI_DEVICE_ID__0039a470.class = 0x601;

_T_PCI_DEVICE_ID__0039a470.vendor_id = 0x8086;

_T_PCI_DEVICE_ID__0039a470.device_id = 0x229c;

_T_PCI_DEVICE_ID__0039a470.subsystem_device = 0x7270;

// All the other ones

// (...)

// End of the list.

_DAT_0039a538 = 0xffff;

_DAT_0039a53a = 0xffff;

_DAT_0039a53c = 0xffff;

_DAT_0039a53e = 0xffff;

_DAT_0039a560 = 0xffff;

_DAT_0039a562 = 0xffff;

_DAT_0039a564 = 0xffff;

_DAT_0039a566 = 0xffff;

return;

}
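To show why the NAS gets index 3 and the VM gets an error, here is a sketch of a table lookup in the spirit of Find_Pci_Device. The struct layout, the function name and the exact matching rule (all four fields must match) are assumptions; only the table values and the 0xffff terminator come from the decompiled code.

```c
#include <stdint.h>

/* Hypothetical layout; the real _T_PCI_DEVICE_ID_ is larger (0x28 bytes apart). */
struct pci_id {
    uint16_t class_code;
    uint16_t vendor_id;
    uint16_t device_id;
    uint16_t subsystem_device;
};

/* Return the index of the first table entry matching dev, or -1.
 * The table must end with an entry whose class_code is 0xffff. */
int find_pci_index(const struct pci_id *table, const struct pci_id *dev) {
    for (int i = 0; table[i].class_code != 0xffff; i++) {
        if (table[i].class_code == dev->class_code &&
            table[i].vendor_id == dev->vendor_id &&
            table[i].device_id == dev->device_id &&
            table[i].subsystem_device == dev->subsystem_device)
            return i;
    }
    return -1;
}
```

A device that isn't in the list simply falls off the end, which would explain the negative result on the VM.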

By Googling up those values, I’ve managed to find some information, which matches the CPU on the real device.

Hardware Class: bridge

Model: "Intel Atom/Celeron/Pentium Processor x5-E8000/J3xxx/N3xxx Series PCU"

Vendor: pci 0x8086 "Intel Corporation"

Device: pci 0x229c "Atom/Celeron/Pentium Processor x5-E8000/J3xxx/N3xxx Series PCU"

SubVendor: pci 0x8086 "Intel Corporation"

SubDevice: pci 0x7270

Ok, progress! How do we deal with it? Well, it’s very simple: we find the one in the VM and patch it into the library! By looking up the devices we have on the VM, I managed to find the correct one. All I had to do was simply look for the class 0x0601, and a single device showed up:

root@AS0000T-14B7:/sys/bus/pci/devices # grep 0x0601 **/class

0000:00:07.0/class:0x060100

root@AS0000T-14B7:/sys/bus/pci/devices # cat 0000\:00\:07.0/vendor

0x8086

root@AS0000T-14B7:/sys/bus/pci/devices # cat 0000\:00\:07.0/device

0x7110

root@AS0000T-14B7:/sys/bus/pci/devices # cat 0000\:00\:07.0/subsystem_device

0x1976

Now, the patch. The instructions we want to change are the following, as they simply set the constants in their respective addresses.

0012456c 66 c7 05 MOV word ptr [_T_PCI_DEVICE_ID__0039a470.class],0x601

fb 5e 27

00 01 06

00124575 66 c7 05 MOV word ptr [_T_PCI_DEVICE_ID__0039a470.vendor_id],0x8086

f4 5e 27

00 86 80

0012457e 66 c7 05 MOV word ptr [_T_PCI_DEVICE_ID__0039a470.device_id],0x229c

ed 5e 27

00 9c 22

00124587 66 c7 05 MOV word ptr [_T_PCI_DEVICE_ID__0039a470.subsystem_device],0x7270

e6 5e 27

00 70 72

A simple find and replace for binary strings should do the job. Replacing 66c705ed5e27009c22 with 66c705ed5e27001071 will change 0x229c to 0x7110, and replacing 66c705e65e27007072 with 66c705e65e27007619 will change 0x7270 to 0x1976. Sure, I could have used those 0xffff entries at the end to patch in my CPU, but that’s for another day. And, sure enough, after patching those two instructions, Probe_Nas_Pch returned 3, the same index as on the NAS itself. This allows some other calls to carry on, which is great for initializing the device.
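Any hex editor or scripting language can do this replacement; for completeness, here is a small sketch of it in C over an in-memory buffer. The function name `patch_bytes` is made up, and loading and saving the library file is left to the caller.

```c
#include <stddef.h>
#include <string.h>

/* Replace every occurrence of find[0..n) with repl[0..n) inside buf.
 * Both patterns have the same length, so the file size never changes.
 * Returns the number of replacements made. */
size_t patch_bytes(unsigned char *buf, size_t len,
                   const unsigned char *find, const unsigned char *repl, size_t n) {
    size_t count = 0;
    if (n == 0 || len < n) return 0;
    for (size_t i = 0; i + n <= len; i++) {
        if (memcmp(buf + i, find, n) == 0) {
            memcpy(buf + i, repl, n);
            count++;
            i += n - 1; /* skip past the bytes just written */
        }
    }
    return count;
}
```

Checking that the return value is exactly 1 is a cheap sanity test: zero means the pattern wasn't found, and more than one means the pattern was too short to be unique.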

It didn’t work.

The “Fun” Patch

Since nothing is easy, that wasn’t enough. Remember the Probe_Nas_Attribute? It calls an inline/unnamed/wtf function - FUN_00127a90 - which is very hard to debug. It does a bunch of checks which we’ll have to manually patch. Fun fact, if we follow the code on Ghidra we’ll end up on another function, which is very similar to this one but not the same. Weird. Nevertheless, I got this one by literally seeing the instruction bytes on GDB and searching them on Ghidra. Go figure.

Anyway, the first thing it does is check the CPU by looking at its name. Yep, the name. No idea why it does that, but I suppose it is to distinguish between multiple models automatically (we’ll see more why later). There are multiple ways we can patch this out of the code, but I’ve decided on the simplest one: patching the condition by replacing JZ with JMP. You see, the way the code works is by calling the check function and comparing its result (in register EAX) with 0x1. If it matches, it jumps away (to inside the if block). If we replace the conditional jump with an unconditional one, we can easily bypass the CPU check.

// Before patch

00127d7a e8 b1 cb CALL FUN_00124930

ff ff

00127d7f 83 f8 01 CMP EAX,0x1

00127d82 0f 84 78 JZ LAB_00128100

03 00 00

// After patch

00127d7a e8 b1 cb CALL FUN_00124930

ff ff

00127d7f 83 f8 01 CMP EAX,0x1

00127d82 48 e9 78 JMP LAB_00128100

03 00 00
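A note on why the patched bytes are `48 e9` rather than just `e9`: the near JZ is a two-byte opcode (`0f 84`) followed by a 32-bit displacement, while the near JMP is a one-byte opcode (`e9`) plus the same displacement. As far as I can tell, putting a `48` prefix in front keeps the instruction at 6 bytes without changing where it jumps, so the displacement can stay untouched. The sketch below just redoes the address arithmetic; the helper names are made up.

```c
#include <stdint.h>

/* Decode a little-endian signed 32-bit displacement. */
int32_t le32(const uint8_t *p) {
    uint32_t v = (uint32_t)p[0] | ((uint32_t)p[1] << 8) |
                 ((uint32_t)p[2] << 16) | ((uint32_t)p[3] << 24);
    return (int32_t)v;
}

/* Target of a relative jump or call: the displacement is counted from
 * the address of the next instruction. */
uint64_t rel32_target(uint64_t insn_addr, unsigned insn_len, int32_t rel32) {
    return insn_addr + insn_len + (uint64_t)(int64_t)rel32;
}
```

Both the original and the patched instruction are 6 bytes long at 0x127d82 with displacement 0x378, so both land on LAB_00128100.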

The second thing it does is look for a Broadcom Ethernet card. Yep. It calls Find_Pci_Device, passing the memory address of a structure holding information about the device. It is looking for a 0x14E4 vendor and 0x16B1 device, or a NetLink BCM57781 Gigabit Ethernet PCIe. Fair enough, my NAS has one of those. The VM itself runs on an Intel® PRO/1000, so we need to patch that in. That shouldn’t be too hard: a simple byte replace does the job here. We’re looking for the 0002 e414 b116 ffff string, and we’ll replace it with 0002 8680 0f10 ffff.

The third thing it does is call FUN_00124f90, which is a very interesting function. It reads /proc/cmdline and looks for the bootpart= and gpt= parameters. In our case, it should get bootpart=2, which will make it call Probe_Usb_Disk_Path. The first part is easily hackable: we have control of the boot command line, so it’s just an easy change. The second part, however, requires the same patch as we did before with the Probe_Booting_Device function. In fact, we can use the same code from it, but just remove the partition number, as FUN_00124f90 will add it anyway. In the end, we have to patch 3 things:

- Boot command line: add a simple bootpart=1.
- Replace the bootpart=2 string in the code with bootpart=1.
- Replace concatenating 2 with 1 on the boot device at the end of the function.

Finally, the fourth (and hopefully last?) thing is another call to Find_Pci_Device, but now looking for an ASMedia ASM1062 SATA controller. This is another easy fix: we can simply look at what controller we have on the VM and replace the bytes for it. The device ID it looks for is 0601 211b 1206 ffff (class + vendor + device id + terminator, all byte-swapped (0601 means 0106)). The replacement string is 0601 ad15 e007 ffff, which is a VMware SATA controller. Cool.
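Since these patterns are just 16-bit values stored little-endian, they don't have to be swapped by hand. A small sketch (the function name `encode_pci_pattern` is hypothetical) that builds the 8-byte search or replacement string from the class, vendor and device IDs:

```c
#include <stdint.h>

/* Encode class, vendor and device IDs plus the 0xffff terminator as
 * four little-endian 16-bit words, the way they appear in the binary. */
void encode_pci_pattern(uint16_t class_code, uint16_t vendor, uint16_t device,
                        uint8_t out[8]) {
    const uint16_t words[4] = { class_code, vendor, device, 0xffff };
    for (int i = 0; i < 4; i++) {
        out[2 * i] = (uint8_t)(words[i] & 0xff);   /* low byte first */
        out[2 * i + 1] = (uint8_t)(words[i] >> 8); /* then high byte */
    }
}
```

For example, class 0x0200 with vendor 0x14E4 and device 0x16B1 gives the Broadcom pattern from earlier, and class 0x0106 with vendor 0x15AD and device 0x07E0 gives the VMware SATA one.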

And, sure enough: if we preload the patch-system-disk library we’ve been working on, we can finally have a working test code:

root@AS6104R-0000:~ # LD_PRELOAD=/usr/lib/patch-system-disk.so ./attribute

Nas Attributes:

Model: [AS6104R] (AS [61] Series, PCH: [3], Chassis: [1], Rever: [1])

Version: [3.5.4.RE11], Boot From: [/dev/sda1, ext4] (bus: 0x130), HP: [1], S3: [1], KLS: [1], LCM: [0], MA: [1], OB: [0], WW: [0]

Serial Number: [AX1108014IC0006], Host ID: [00-00-00-00-00-00]

NAS with [4] LAN, [4] USB, [4] SATA, [0] ESATA

Lan Order [4]: 3, 2, 0, 1,

Usb Order [4]: 0x140/230, 0x150/220, 0x110/240, 0x120/210,

Sata Order [4]: 3, 2, 0, 1,

M.2 Order [0]:

ESata Order [0]:

Finally.

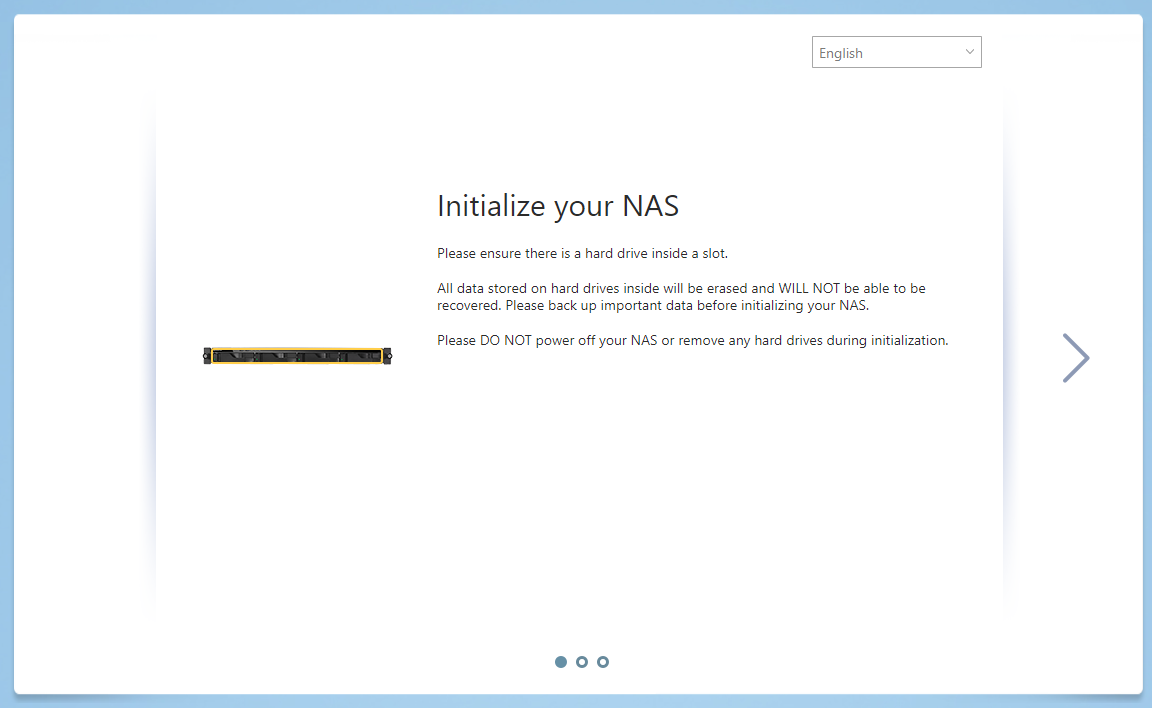

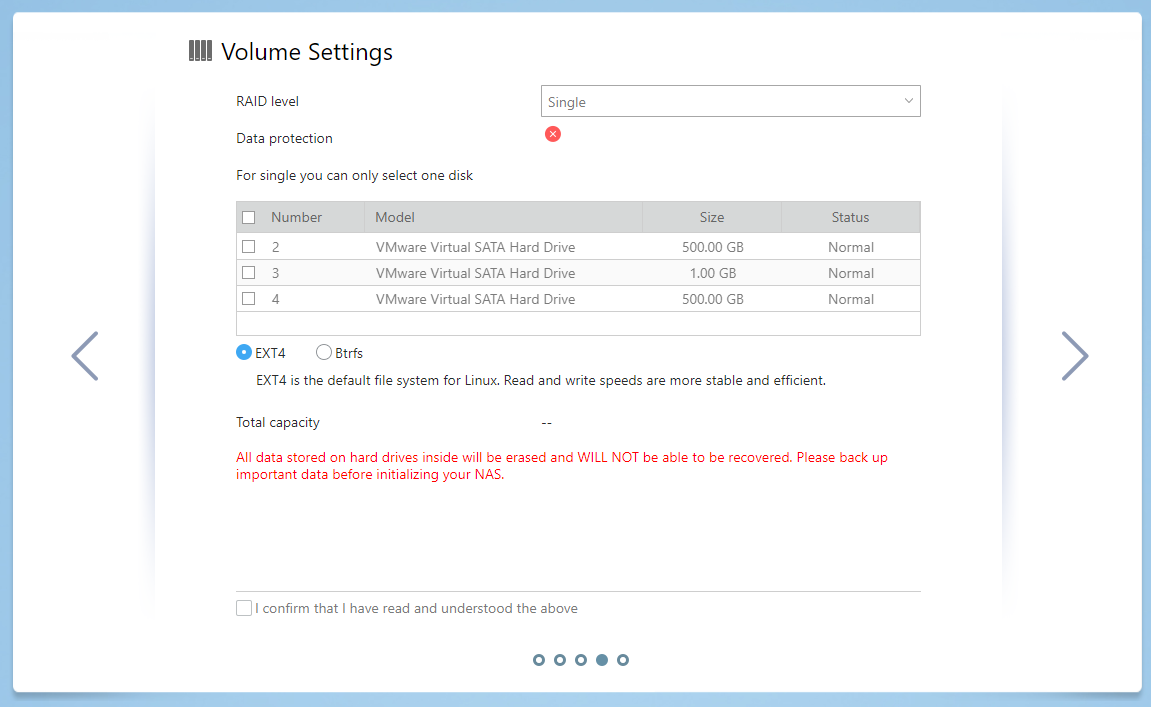

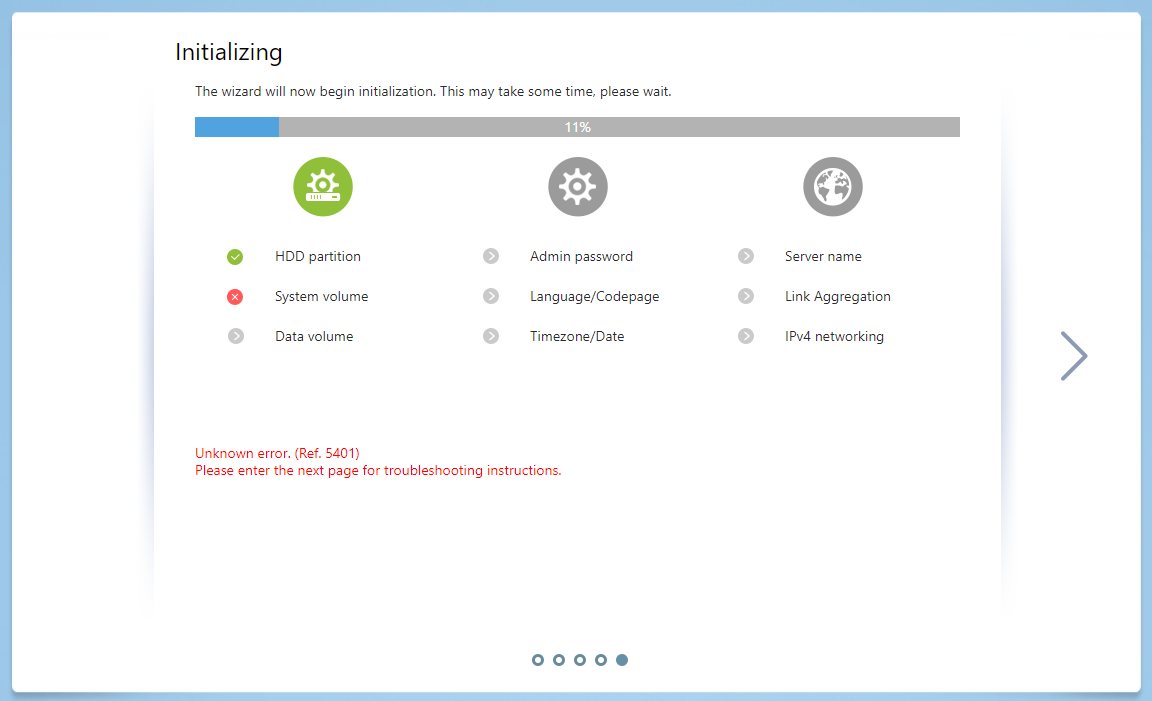

The Installation

The installation process is very simple. There’s a wizard which will help you through the process, asking a few simple questions. There’s a custom mode that will allow you to define the RAID level and everything. Ah, and obviously now it sees the disks, as the model it thinks it is actually has SATA ports. Hehe.

But, since we hacked the shit out of this firmware, it won’t install. It kinda makes sense, actually, as it uses different binaries for installation (such as fwupdate) and I’m patching only a few daemons and libraries. The next step is to figure out what exactly is being called and with what parameters, and then start reverse engineering from there as well. Hopefully that will allow me to install the OS.

Hopefully. See you in the next part!