Bypassing anti-cheat software (part 2)

By Ricardo

Ok, it has been a while since the first part, I know. If you haven’t read it, please check it out first - this one will make way more sense! Spoiler: in this part 2 I’ll do the next iteration of this project: improving the emulated devices and the algorithm. Here’s part 1 if you haven’t read it yet:

Everything I’ve talked about so far is not new. People have been talking about this for a while, including myself in previous talks over the last few years. But recently I decided to revisit this project as a lot has changed: we got new games, new ideas and more powerful hardware - way more powerful hardware. I’ve had some interesting ideas since then, and I think I can make this bot better (and faster). But for that, we need to take a step back, discuss this a bit and explore some cool and interesting ideas.

Disclaimer

Waaaaaaaaaait! You thought I had forgotten it, right? Yeah, no. Here we go:

I do not endorse cheating. Everything you see here is a proof of concept for the sole purpose of research. Any content provided here was created in a controlled environment and is available for documentation/research only. Everything is provided “AS IS” and no support is or ever will be given. Oh, and I’m not responsible if you get banned from games, get your house raided by federal authorities, or get yourself in any kind of trouble because of this.

Did you get all of that? Good, now we can talk.

Let’s talk about this

Disclaimer: this specific part may get a bit philosophical, so feel free to skip it if you only want the juicier technical details.

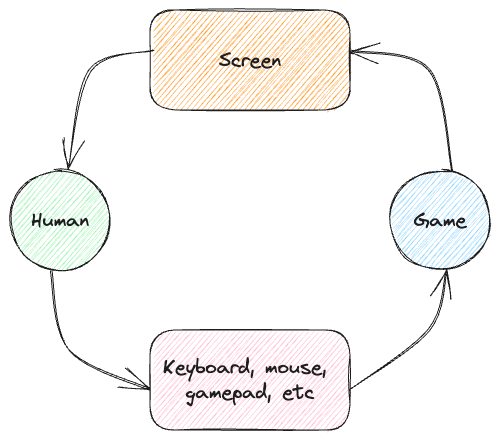

Let’s tackle this problem from another perspective. If we remove everything from the equation, we only have two things: the game and the player. From the game’s point of view, the player is an unknown variable: it can’t predict what the user is doing. It is, in fact, a kind of a black box:

- The game receives player instructions, operates and outputs data in the screen.

- The player “receives” (more like sees) game output from the screen, operates (thinks), and outputs the next instructions through a peripheral attached to the computer.

Basically this:

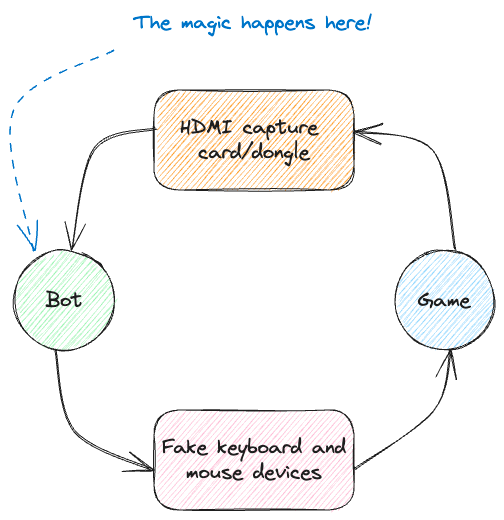

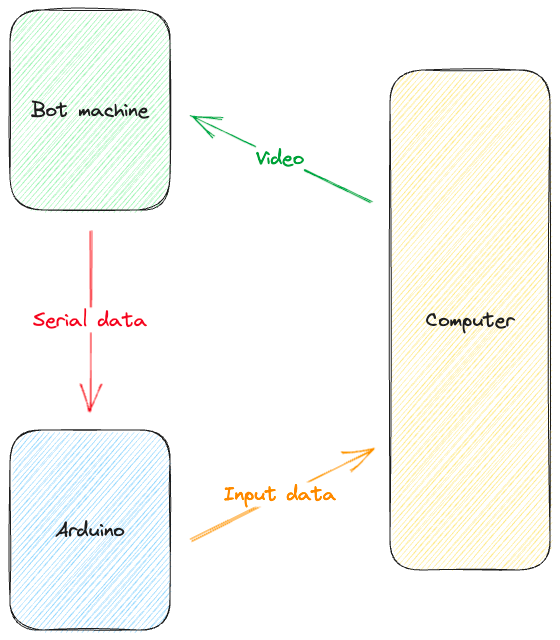

But… what if we replace the human with a bot? Instead of eyes, we capture video through an HDMI capture card, and instead of a mouse and keyboard, we use a device we control. It would be something like this:

In part 1, we had a script that captured the video and processed it locally (which may be useful sometimes, don’t get me wrong). The inputs would still be sent through an external device to bypass the anti-cheat software blocking macro tools. However, this can easily be detected, as players don’t usually have something running OpenCV at the same time as a game… right?

This time we have a better approach: nothing runs on the game host computer, except the game itself. From the anti-cheat software’s perspective, nothing is really weird on this machine, as it only has two different things showing up:

- An extra monitor (provided by the HDMI capture card)

- An extra keyboard and mouse

Mind you, this is 100% normal in the gaming community. People use extra displays, capture cards, extra peripherals and everything, and that is totally fine. I’ve seen setups with multiple input devices just because of those pesky FPS games. Thanks, streamers, for making this normal!

With this approach, things change a bit on how it works:

- Game runs on the host as usual, bot code runs on a second machine.

- Video output of the game host is sent as video capture to the bot machine.

- The bot machine processes the frame and decides what to do.

- The bot machine sends data to a microcontroller emulating a USB mouse.

- The game host detects the mouse movement just like any other mouse and reacts to it.

In essence, we just replaced our human with a robot.

Trying to make it happen

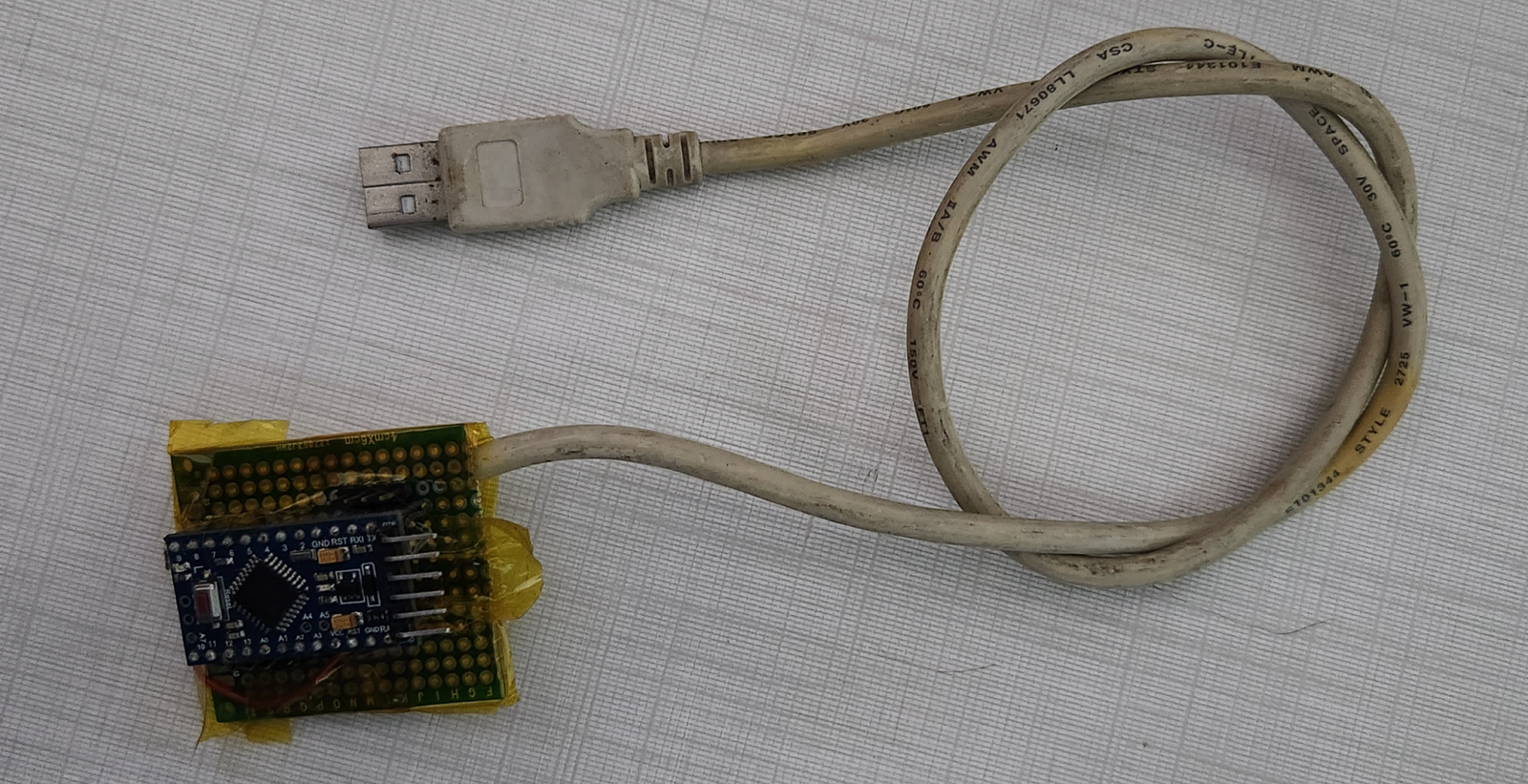

There were multiple attempts to get this done. The first one was with an Arduino. Well, technically just the ATmega328P, the AVR microcontroller behind it, as I was not using their framework. But yeah, it worked like this:

For this to work, I used the magical V-USB. This is a really cool library that allows you to bitbang USB 1.1, and it allowed me to implement an emulated mouse - well, emulated from the Arduino’s perspective, as the computer sees it as an actual mouse. I picked the same approach as the previous version of the project though: a tablet-like input device that reports absolute positions. This was to make my life easier. If you are interested, here’s the full USB descriptor:

0x05, 0x01, // Usage Page (Generic Desktop Ctrls)

0x09, 0x02, // Usage (Mouse)

0xA1, 0x01, // Collection (Application)

0x09, 0x01, // Usage (Pointer)

0xA1, 0x00, // Collection (Physical)

0x05, 0x09, // Usage Page (Button)

0x19, 0x01, // Usage Minimum (0x01)

0x29, 0x03, // Usage Maximum (0x03)

0x15, 0x00, // Logical Minimum (0)

0x25, 0x01, // Logical Maximum (1)

0x95, 0x03, // Report Count (3)

0x75, 0x01, // Report Size (1)

0x81, 0x02, // Input (Data,Var,Abs,No Wrap,Linear,Preferred State,No Null Position)

0x95, 0x01, // Report Count (1)

0x75, 0x05, // Report Size (5)

0x81, 0x03, // Input (Const,Var,Abs,No Wrap,Linear,Preferred State,No Null Position)

0x05, 0x01, // Usage Page (Generic Desktop Ctrls)

0x09, 0x30, // Usage (X)

0x09, 0x31, // Usage (Y)

0x16, 0x01, 0x80, // Logical Minimum (-32767)

0x26, 0xFF, 0x7F, // Logical Maximum (32767)

0x75, 0x10, // Report Size (16)

0x95, 0x02, // Report Count (2)

0x81, 0x02, // Input (Data,Var,Abs,No Wrap,Linear,Preferred State,No Null Position)

0xC0, // End Collection

0xC0, // End Collection

To be able to control the mouse, I’ve also programmed the microcontroller to read a few bytes from the serial port every now and then. These bytes would then be written to the USB port on the next USB device report. Since the X and Y are 16 bits, 5 bytes are enough for this:

Byte 0: xxxxxLMR

Byte 1: least significant bits of X

Byte 2: most significant bits of X

Byte 3: least significant bits of Y

Byte 4: most significant bits of Y
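For illustration, here is a minimal sketch in Go of how the sending side could pack that 5-byte frame. It is not the original code: the function and constant names are made up, and the button bit positions are an assumption read straight from the `xxxxxLMR` layout above (R in bit 0, M in bit 1, L in bit 2).

```go
package main

import (
	"encoding/binary"
	"fmt"
)

// Button bits, assuming the "xxxxxLMR" layout from the text:
// R is bit 0, M is bit 1, L is bit 2. These names are hypothetical.
const (
	serialBtnRight  byte = 1 << 0
	serialBtnMiddle byte = 1 << 1
	serialBtnLeft   byte = 1 << 2
)

// encodeSerialFrame packs the button state and the absolute X/Y position
// into 5 bytes: buttons, X low, X high, Y low, Y high.
func encodeSerialFrame(buttons byte, x, y int16) [5]byte {
	var f [5]byte
	f[0] = buttons & 0x07 // only the three lowest bits carry buttons
	binary.LittleEndian.PutUint16(f[1:3], uint16(x))
	binary.LittleEndian.PutUint16(f[3:5], uint16(y))
	return f
}

func main() {
	f := encodeSerialFrame(serialBtnLeft, 0x1234, -2)
	fmt.Printf("% x\n", f[:]) // prints "04 34 12 fe ff"
}
```

The least significant byte goes first, which matches both the byte order listed above and the little-endian 16-bit fields that HID reports use.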

That… didn’t go as well as I expected. Don’t get me wrong, it worked, but this approach had one major drawback: performance. It was painfully slow. Not sure why though. I think it was a mix of UART and USB problems, as well as just crappy coding on my part.

Plus, it was.. well, kinda ugly:

But honestly I think the biggest issue was that it was just not good. It was flaky and gave me more trouble than I considered worth it. For that reason, I’m not even going to bother releasing the code for it - if you really want it, ping me and I’ll send it to you. But it’s bad.

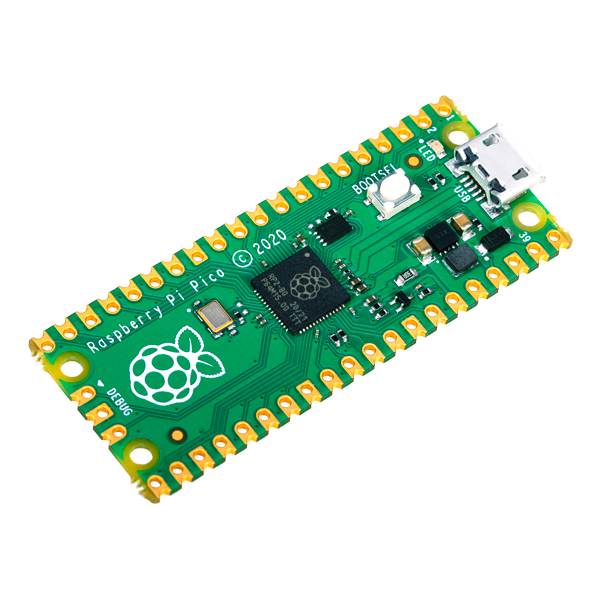

Anyway, with all that in mind, I went ahead and ordered the next interesting microcontroller: the Raspberry Pi Pico.

Pico and FANCU

The Raspberry Pi Pico is a way more powerful microcontroller, and has plenty of features that can be used to improve this project. It has dedicated USB and Wifi support - plus it has multiple cores (not that we actually use them). On top of it, I’ve created a new project: FANCU.

The Fake Network Controlled User (FANCU - catchy, no?) is a small project to “emulate user behavior” through HID devices. It consists of two parts:

- Raspberry Pi Pico code that listens for UDP packets indicating what to do with the keyboard and mouse.

- Some brain (aka bot) code that will decide what the Pico needs to do.

Since you are going to ask anyway, here’s the code before we even begin:

Let me go through every single part of the project.

Pico USB

You see, since this is a pretty powerful microcontroller (and surprisingly cheap!), it also has actual USB support. This means we can use a proper USB stack: TinyUSB. Not only that, there are examples of how to use it on the Pico for exactly what I need: emulating a HID device. The USB descriptor (which I did not bother to change from TinyUSB’s code) is a bit different this time:

- X and Y are signed 8bit integers

- No more tablet mode, we’re going full mouse emulation and relative values!

I’ve decided to keep it this way as I would like to emulate a more realistic mouse instead of a tablet. This obviously brings back the problem of the mouse being way harder to control, but more on that later.

Oh yeah, and there’s the descriptor for it, but using the TinyUSB macros (I’m lazy ok):

HID_USAGE_PAGE ( HID_USAGE_PAGE_DESKTOP ) ,\

HID_USAGE ( HID_USAGE_DESKTOP_MOUSE ) ,\

HID_COLLECTION ( HID_COLLECTION_APPLICATION ) ,\

HID_USAGE ( HID_USAGE_DESKTOP_POINTER ) ,\

HID_COLLECTION ( HID_COLLECTION_PHYSICAL ) ,\

HID_USAGE_PAGE ( HID_USAGE_PAGE_BUTTON ) ,\

HID_USAGE_MIN ( 1 ) ,\

HID_USAGE_MAX ( 5 ) ,\

HID_LOGICAL_MIN ( 0 ) ,\

HID_LOGICAL_MAX ( 1 ) ,\

/* Left, Right, Middle, Backward, Forward buttons */ \

HID_REPORT_COUNT( 5 ) ,\

HID_REPORT_SIZE ( 1 ) ,\

HID_INPUT ( HID_DATA | HID_VARIABLE | HID_ABSOLUTE ) ,\

/* 3 bit padding */ \

HID_REPORT_COUNT( 1 ) ,\

HID_REPORT_SIZE ( 3 ) ,\

HID_INPUT ( HID_CONSTANT ) ,\

HID_USAGE_PAGE ( HID_USAGE_PAGE_DESKTOP ) ,\

/* X, Y position [-127, 127] */ \

HID_USAGE ( HID_USAGE_DESKTOP_X ) ,\

HID_USAGE ( HID_USAGE_DESKTOP_Y ) ,\

HID_LOGICAL_MIN ( 0x81 ) ,\

HID_LOGICAL_MAX ( 0x7f ) ,\

HID_REPORT_COUNT( 2 ) ,\

HID_REPORT_SIZE ( 8 ) ,\

HID_INPUT ( HID_DATA | HID_VARIABLE | HID_RELATIVE ) ,\

/* Vertical wheel scroll [-127, 127] */ \

HID_USAGE ( HID_USAGE_DESKTOP_WHEEL ) ,\

HID_LOGICAL_MIN ( 0x81 ) ,\

HID_LOGICAL_MAX ( 0x7f ) ,\

HID_REPORT_COUNT( 1 ) ,\

HID_REPORT_SIZE ( 8 ) ,\

HID_INPUT ( HID_DATA | HID_VARIABLE | HID_RELATIVE ) ,\

HID_USAGE_PAGE ( HID_USAGE_PAGE_CONSUMER ), \

/* Horizontal wheel scroll [-127, 127] */ \

HID_USAGE_N ( HID_USAGE_CONSUMER_AC_PAN, 2 ), \

HID_LOGICAL_MIN ( 0x81 ), \

HID_LOGICAL_MAX ( 0x7f ), \

HID_REPORT_COUNT( 1 ), \

HID_REPORT_SIZE ( 8 ), \

HID_INPUT ( HID_DATA | HID_VARIABLE | HID_RELATIVE ), \

HID_COLLECTION_END , \

HID_COLLECTION_END

Pico UDP

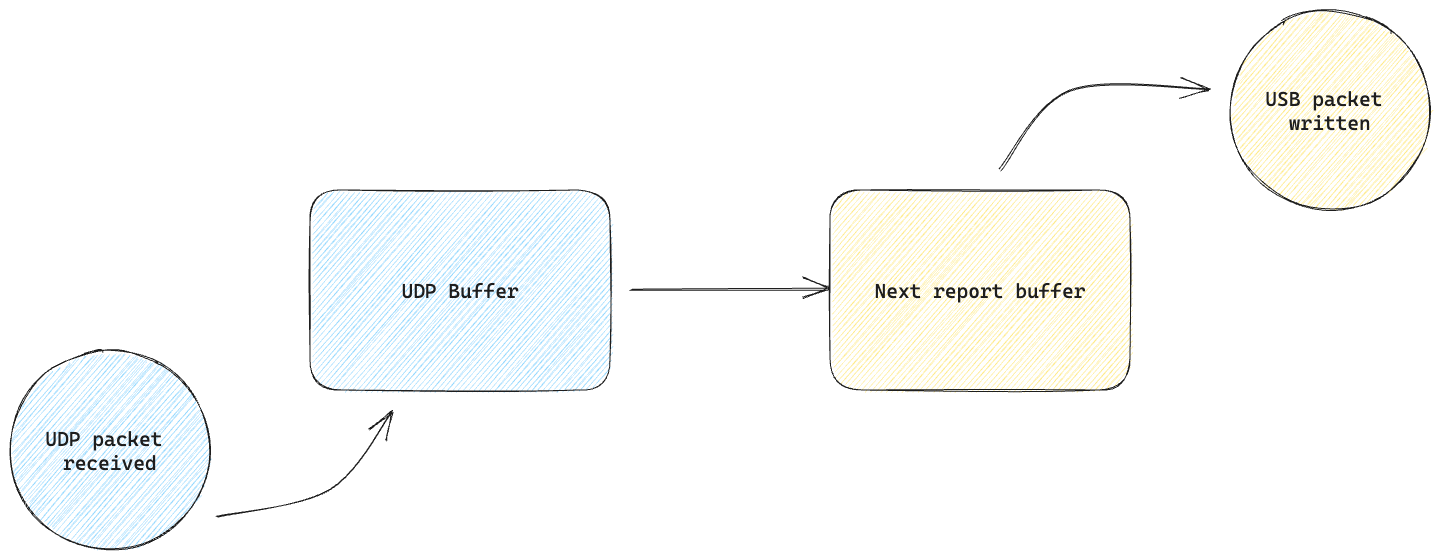

Together with the USB code, I’ve also added some wifi stuff and UDP support, so that it listens to UDP packets all the time. Once those packets arrive, we can just write them directly (almost) to the USB report.

You might be wondering why I don’t process the data before writing it to the USB report. Well, I want the data to be very simple and fast. If I can avoid any kind of parsing, this improves the latency by.. well, reducing the amount of processing time for each packet. Simple as that. Plus the packets are not that complex anyway, so there’s no need for any kind of encapsulation, headers, or even parsing.

A packet consists of 16 bytes and contains both the mouse data and some unused chunks, which can later be used for keyboard control. It is structured as follows:

0000 0xFF (marker)

0001 Mouse buttons (using the last 3 bits: xxxxxRML)

0002 Mouse delta X (signed 8bit integer)

0003 Mouse delta Y (signed 8bit integer)

0004 Unused

0005 Unused

0006 Unused

0007 Unused

0008 Unused

0009 Unused

000A Unused

000B Unused

000C Unused

000D Unused

000E Unused

The unused bytes can be used to define keyboard behavior in the future. Technically we wouldn’t need much for that, just a few bytes for the scan codes and key statuses, and that’s it. The remaining bytes should be more than enough for that, but I’ve decided to keep this implementation out of this part for now (spoiler: part 3 will explain why!).
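To make the layout concrete, here is a minimal sketch in Go of what the sending side of such a packet could look like. It is an illustration under assumptions, not the FANCU code: the names `buildPacket`, `sendPacket` and the button constants are hypothetical, and the bit positions simply follow the `xxxxxRML` description above (L in bit 0, M in bit 1, R in bit 2). Keep in mind that a standard HID boot mouse puts right in bit 1 and middle in bit 2, so check the bit order against the firmware before relying on it.

```go
package main

import (
	"fmt"
	"net"
)

const (
	packetSize   = 16   // total size stated in the text
	packetMarker = 0xFF // first byte of every packet
)

// Button bits as described by "xxxxxRML" (assumed: L=bit 0, M=bit 1, R=bit 2).
const (
	btnLeft   byte = 1 << 0
	btnMiddle byte = 1 << 1
	btnRight  byte = 1 << 2
)

// clampInt8 limits a delta to the [-127, 127] range of the HID report.
func clampInt8(v int) int8 {
	if v > 127 {
		return 127
	}
	if v < -127 {
		return -127
	}
	return int8(v)
}

// buildPacket fills the marker, buttons and relative X/Y; the rest stays zero.
func buildPacket(buttons byte, dx, dy int) [packetSize]byte {
	var p [packetSize]byte
	p[0] = packetMarker
	p[1] = buttons & 0x07
	p[2] = byte(clampInt8(dx))
	p[3] = byte(clampInt8(dy))
	return p
}

// sendPacket writes one packet to addr ("host:port") over UDP.
func sendPacket(addr string, p [packetSize]byte) error {
	conn, err := net.Dial("udp", addr)
	if err != nil {
		return err
	}
	defer conn.Close()
	_, err = conn.Write(p[:])
	return err
}

func main() {
	p := buildPacket(btnLeft, -5, 300) // 300 is clamped to 127
	fmt.Printf("% x\n", p[:])
}
```

`sendPacket` is not called in `main` because the address and port of the Pico depend on your setup. A long-running bot would also keep one connection open instead of dialing per packet.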

Putting it all together!

The whole code runs within a loop that does the following:

1. Wifi housekeeping

2. USB housekeeping

3. HID housekeeping (send the next USB report if available)

4. Copy the UDP buffer into the next USB report buffer

5. Print the report for debugging purposes

The UDP buffer is written by an interrupt that copies the received UDP data into it. Access to this buffer is controlled by a mutex, as step 4 (the copy) also needs to access it. Step 3 (send report) is responsible for sending the received data to the host device - which is done on the next loop iteration.

There is a bug. Step 3 will send a single report (keyboard first), and once the host says it’s all good, the code will send the next one. In the meantime, that buffer might have changed. I’m not worried about this at the moment due to the low data throughput, but on fast-paced devices it might be necessary to introduce a queue.
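For what such a queue could look like, here is a small fixed-size FIFO, sketched in Go for readability rather than in the firmware’s C. Everything in it is hypothetical (type names, capacity, the drop-oldest policy), and it has no locking: on the Pico, the push side runs in an interrupt, so the same mutex discussed above would still be needed around both operations.

```go
package main

import "fmt"

// report mirrors the 16-byte packet received over UDP.
type report [16]byte

// reportQueue is a fixed-size ring buffer. When it is full, the oldest
// report is dropped so the newest input always gets in.
type reportQueue struct {
	buf   []report
	head  int // index of the oldest element
	count int // number of stored elements
}

// newReportQueue allocates a queue; capacity must be greater than zero.
func newReportQueue(capacity int) *reportQueue {
	return &reportQueue{buf: make([]report, capacity)}
}

// push appends a report, discarding the oldest one if the queue is full.
func (q *reportQueue) push(r report) {
	if q.count == len(q.buf) {
		q.head = (q.head + 1) % len(q.buf)
		q.count--
	}
	tail := (q.head + q.count) % len(q.buf)
	q.buf[tail] = r
	q.count++
}

// pop removes and returns the oldest report, or false if the queue is empty.
func (q *reportQueue) pop() (report, bool) {
	if q.count == 0 {
		return report{}, false
	}
	r := q.buf[q.head]
	q.head = (q.head + 1) % len(q.buf)
	q.count--
	return r, true
}

func main() {
	q := newReportQueue(2)
	for i := byte(1); i <= 3; i++ {
		var r report
		r[0] = 0xFF
		r[2] = i // delta X, used here just to tell reports apart
		q.push(r)
	}
	for {
		r, ok := q.pop()
		if !ok {
			break
		}
		fmt.Println(r[2]) // prints 2, then 3: the first report was dropped
	}
}
```

Dropping the oldest report is a trade-off: with relative deltas, a dropped report means lost movement, so merging deltas or blocking the producer might suit better depending on the game.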

Once we put it all together and remove all of the boilerplate code, it is basically as simple as this:

Yep, not even joking. It’s really simple.

Brains of operation

The brains of the operation are kinda weird. It’s hacked-together Go code that I’ve been writing over the course of a few weekends and vacation days. Be aware that the code is not designed to be pretty or anything, it is written to work. Not work well, just work. Lots of it came from ChatGPT anyway.

The algorithm behind it is quite simple actually:

1. Find the cursor. We need to know our base position for everything.

2. Find the relevant objects (our targets, such as mobs, enemies, etc).

3. Do we have a currently selected target? If not, pick the closest one.

4. Move our cursor a bit in the direction of the currently selected target.

5. Are we there? If yes, click on it and “unpick” the target (so we can find a new one).

6. Go to 1.

So basically the code consists of finding a target, clicking on it and moving on. Note that this strategy does not suit all games - Ragnarok being one of them. The algorithm was different when I was working with Ragnarok, so this version might perform terribly on it - or not, only testing will tell!

All that said, we need to grab the image somehow. Instead of running the code on the target machine as we were doing before, this now runs on a separate machine, and we grab the game image by using a generic HDMI capture device. This is then processed by GoCV with a ton of techniques that I copied online and all of the information necessary for the algorithm is extracted.

Note: if you want to reproduce this, use a USB 3 capture device. They have fewer compression artifacts, better framerate at higher resolutions and overall better performance. I’m using this one - it’s good enough.

Rinse and repeat. That’s it.

Mouse movement calculation

As we discussed before, we don’t have the mouse as a tablet anymore. This means we have to calculate the movement relative to current mouse and target positions. The problem here is that this is definitely not linear, as it depends a lot on the frequency of the reports, mouse acceleration settings, and also just plain old OS behavior. I’ve tried to map delta X/Y to movement on the screen and was unable to.

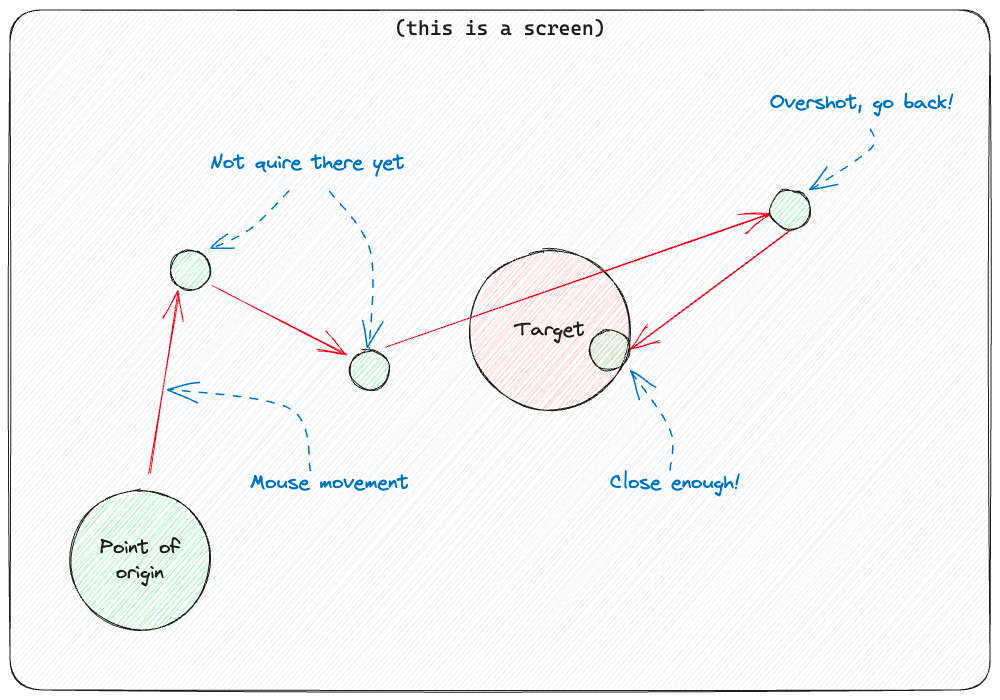

There are multiple ways of solving this problem, including some crazy stuff with machine learning, which is definitely something worth tackling at some moment. For now, we just do what humans do: try, fail, repeat. The further we are from the target, the faster we move. If we overshoot it, just go back slower. Simple as that. It’s like a 5-year-old using a mouse for the first time, but worse!

The actual implementation doesn’t really overshoot often as it slows down the closer we get, but you get the idea.

To figure out how far we’re from the target, we calculate the distance between the points and work from there. Something like this:
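A minimal sketch of that idea in Go (not the actual FANCU code): the step size grows with the distance to the target and is capped at what a signed 8-bit delta can carry. The `gain` factor is a hypothetical tuning knob, and because of acceleration and OS behavior the resulting on-screen movement will not match the delta one-to-one, which is why the loop re-measures the cursor on every frame.

```go
package main

import (
	"fmt"
	"math"
)

// maxDelta is the largest magnitude a signed 8-bit HID delta can carry.
const maxDelta = 127

// stepToward returns the relative X/Y deltas for one report, given the
// cursor (cx, cy) and target (tx, ty) positions in pixels. The further
// away the target is, the bigger the step, up to maxDelta.
func stepToward(cx, cy, tx, ty, gain float64) (int8, int8) {
	dx, dy := tx-cx, ty-cy
	d := math.Hypot(dx, dy)
	if d == 0 {
		return 0, 0 // already on target
	}
	speed := math.Min(d*gain, maxDelta)
	if speed < 1 {
		speed = 1 // always move at least one unit so we don't stall
	}
	// Scale the unit direction vector by the chosen speed.
	return int8(math.Round(dx / d * speed)), int8(math.Round(dy / d * speed))
}

func main() {
	fmt.Println(stepToward(0, 0, 1000, 0, 0.25)) // far away: capped at 127
	fmt.Println(stepToward(0, 0, 0, -40, 0.25))  // closer: smaller step
}
```

Because the speed shrinks as the distance shrinks, the cursor slows down as it approaches the target, and an overshoot is corrected on the next frame with a smaller step in the opposite direction.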

The question is: how well does it work? It works as terribly as you’d expect from a weekend of coding, but not as badly as it sounds!

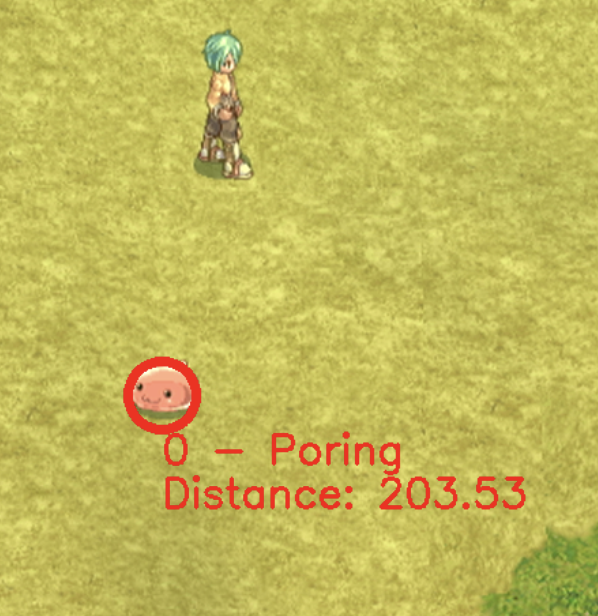

Ragnarok demo

I wondered if I could still get it to work with Ragnarok, just like last time. This is the game that started all of this, and it has anti-cheat software that I’ve been testing against for months now. The major complexity is finding the mobs, as the colors are kinda crazy sometimes and my code for finding them is pretty bad (I’m looking for colors instead of shapes, as shapes are quite slow to detect).

Before we go, here’s a reminder: we’re now controlling the mouse as a relative pointing device, meaning that our mouse is using that human-like-that-definitely-looks-like-a-bot approximation technique. We don’t know the cursor position, so on every frame we check where it is and decide what to do from there. Here’s the demo video:

At the end of the video, you can see the cursor tracker getting lost and just thinking an item is the cursor. This is because I’m tracking it with colors as I’ve said before, so… yeah, it’s broken. But technically this is enough for me to consider this as a viable technique for cheating on this game.

Note that the game was running with the anti-cheat enabled. There were dozens of tests on that game over the span of multiple weeks and still no ban. Nice!

Aim Trainer demo

This time I also introduced a new “game”: Aim Trainer. There are multiple reasons for it, but the main one is the need for something simpler to experiment with, just to validate the concept. Ragnarok takes a while to find the mobs, has interference from other players and has too many variables involved. Aim Trainer is simple.

Note that Aim Trainer has no anti-cheat. This is intentional, as I’m not worried about this at the moment - Ragnarok already demonstrates that it bypasses such tools.

So, how well does it work? Well, it actually went better than I expected! Here’s the video of it, with the final algorithm implemented:

On the Aim Trainer video you can see both the terminal showing the next USB reports (as we discussed earlier) and the game running through the captured video. You can also see the cursor (green lines) moving in the direction of the target (marked in red as selected), clicking on it (which makes it disappear), and moving to the next one.

Quick disclaimer: the game was indeed manually restarted once that run was set to game over, but that was the only time I touched the target’s peripherals.

The second run was actually pretty good, with 17 hits. That’s impressive for something I coded mostly on a Saturday night!

Conclusions

Ok, so what did we achieve here?

- We created a tool that allows for easier emulation of HID devices over the network

- We created an algorithm for controlling such HID devices

- We tested it against multiple games (still surprised by how good it was on Aim Trainer lol)

- And we continued to bypass an actual anti-cheat tool (on the Ragnarok test)

The conclusions are honestly the same as before: we have a technique that is harder to detect, but detection is still doable through very simple pattern detection. For example, in the current implementation of the algorithm, the code doesn’t add jitter/error margin to the mouse movement, and this shows the input device is likely not being controlled by a human - so this would be a very simple way of blocking my bot.

However, with all of this said, both on this and the previous parts, I need to say that the whole goal of this research is not to directly break anti-cheat tools for the sake of hacking the games, but to bring attention to developers of such tools that there are some untouched fields that can (and eventually will) be used for cheating. If anything, one of the coolest lessons you can take from all of this so far is that you can’t trust the software if you can’t trust the hardware.

Now what?

Well, there are some other branches of this research that I’ll try to explore in future parts, but no promises yet. I’ll be back with this topic eventually!

And remember kids: don’t cheat! But if you do, don’t get caught!